AI-Generated Nude Deepfakes: Enterprise Cybersecurity & Human Risk Management Challenges in 2026

DeepNude AI and similar tools are reshaping cybersecurity threats in 2026, enabling AI-generated fake nudes, deepfake scams, and privacy violations. Explore the risks, real-world cases, legal challenges, and strategies to protect individuals and businesses.

Imagine sharing a regular photo online, only to have artificial intelligence alter it into a realistic nude image without your permission. DeepNude AI, first released in 2019, and the many copycat tools that followed have made this possible, and by 2026 they have become a major privacy and cybersecurity threat.

Using advanced machine learning, this AI can create fake but highly convincing images, which are now being used for blackmail, deepfake scams, and online extortion.

In this article, we’ll cover:

- How DeepNude AI works and why it’s a growing cybersecurity risk

- The rise of deepfake scams and how criminals are exploiting this technology

- Ethical and legal concerns surrounding AI-generated fake images

- Ways to protect yourself and your business from these threats

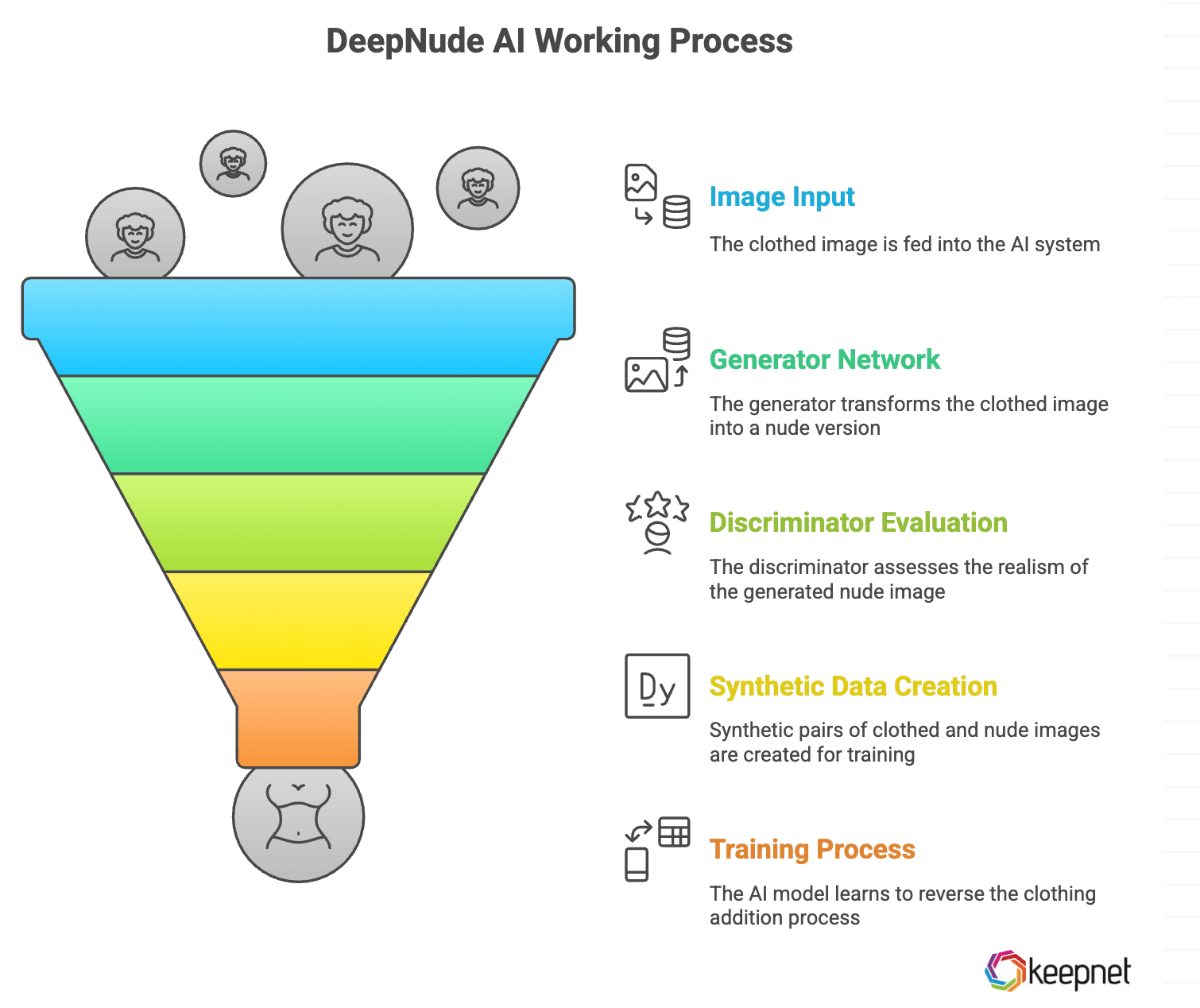

The Mechanics of DeepNude AI: How It Works

DeepNude AI is a controversial application that uses artificial intelligence to generate fake nude images from clothed photographs, primarily targeting images of women.

Its operation relies on advanced machine learning techniques, specifically a type of Generative Adversarial Network (GAN) tailored for image transformation.

Below is an explanation of how DeepNude AI works, breaking down its core mechanics.

Core Technology: Conditional Generative Adversarial Networks (GANs)

DeepNude AI is powered by a conditional GAN, a specialized version of GANs designed to generate images based on specific inputs. A GAN consists of two neural networks:

- Generator: This network takes an input (in this case, a clothed image) and produces a new image (a nude version of the input).

- Discriminator: This network learns to distinguish real images from generated ones, and its feedback guides the generator to improve its output.

In a conditional GAN, the generator’s output is conditioned on the input image, meaning it doesn’t create random nude images but instead transforms the specific clothed photo provided into a corresponding nude version.

Image-to-Image Translation: The Pix2Pix Approach

DeepNude likely uses a framework similar to pix2pix, a conditional GAN designed for image-to-image translation. This technique transforms images from one domain (e.g., clothed photos) to another (e.g., nude images). Here’s how it applies to DeepNude:

- Input: A photograph of a person wearing clothes.

- Output: A generated image of the same person without clothes, with the body inferred by the AI.

The pix2pix model typically requires paired datasets—images that show the same subject in both the input and output states (e.g., a person clothed and unclothed in the same pose).

However, such real-world paired data is scarce and ethically problematic to obtain. To overcome this, DeepNude likely relies on synthetic pairs:

- Synthetic Data Creation: Starting with nude images (possibly from existing datasets), the AI artificially adds clothing to create corresponding clothed versions. These synthetic pairs serve as training data.

- Training Objective: The model learns to reverse this process—taking a clothed image and “removing” the clothing to reconstruct the underlying body.

Key Components of the DeepNude AI Process

- Generator Architecture: The generator is likely built using a U-Net, a type of convolutional neural network commonly used in pix2pix. U-Net's skip connections help preserve fine details from the input image (e.g., background, face, or limbs) while transforming the clothed areas into realistic skin and body parts.

- Segmentation (Possible Step): To improve accuracy, DeepNude may use segmentation to identify clothing areas in the input image. By isolating these regions, the generator can focus on altering only the clothed parts, leaving the rest of the image intact. This could be an explicit step or learned implicitly by the model during training.

- Training Process: The generator takes a clothed image and generates a nude version. The discriminator evaluates the realism of the generated nude image, often considering both the input (clothed image) and output (nude image) to ensure they form a plausible pair. Over time, the generator refines its ability to produce convincing nude images that align with the input.

- Loss Functions: In addition to the adversarial loss (from the discriminator), the model may use an L1 loss or similar metric to ensure the generated image closely matches the synthetic ground truth during training, enhancing detail and coherence.

Privacy Under Threat: Real-World Cases

The rise of DeepNude AI has created serious privacy concerns, affecting both celebrities and private individuals.

This technology enables the creation of highly realistic, non-consensual explicit images, leading to ethical and legal challenges.

Recent Incidents Involving Public Figures

In January 2024, AI-generated explicit images of Taylor Swift were widely circulated on X (formerly Twitter), Facebook, Reddit, and Instagram.

These images, produced without her consent, highlight how easily this technology can be misused, causing reputational harm and emotional distress. (Source: Wikipedia)

Impact on Private Individuals

The misuse of AI to create fake nude images extends beyond celebrities. In Texas, a 14-year-old student, Elliston Berry, discovered that AI-generated nude images of her were being shared among classmates.

This case underscores the severe psychological harm and privacy violations caused by these tools. (Source: The Times)

Legal Responses to AI-Generated Explicit Content

Governments are starting to take action against AI-generated non-consensual nude images:

- The "Take It Down Act," a bipartisan federal law signed in May 2025, criminalizes the distribution of non-consensual intimate images, including AI-generated deepfake nudes. Melania Trump publicly championed the bill to protect victims. (Source: The Times)

- Minnesota is considering laws that would impose civil penalties on companies that develop or distribute AI-powered "nudification" tools capable of producing explicit images of people without their consent. (Source: AP News)

- San Francisco's City Attorney has filed a first-of-its-kind lawsuit against the operators of websites that generate AI deepfake nudes, aiming to set a legal precedent. (Source: Politico)

As DeepNude AI and similar tools become more advanced and accessible, stronger legislation, AI-powered content detection, and stricter platform policies are essential to prevent misuse, protect victims, and hold offenders accountable.

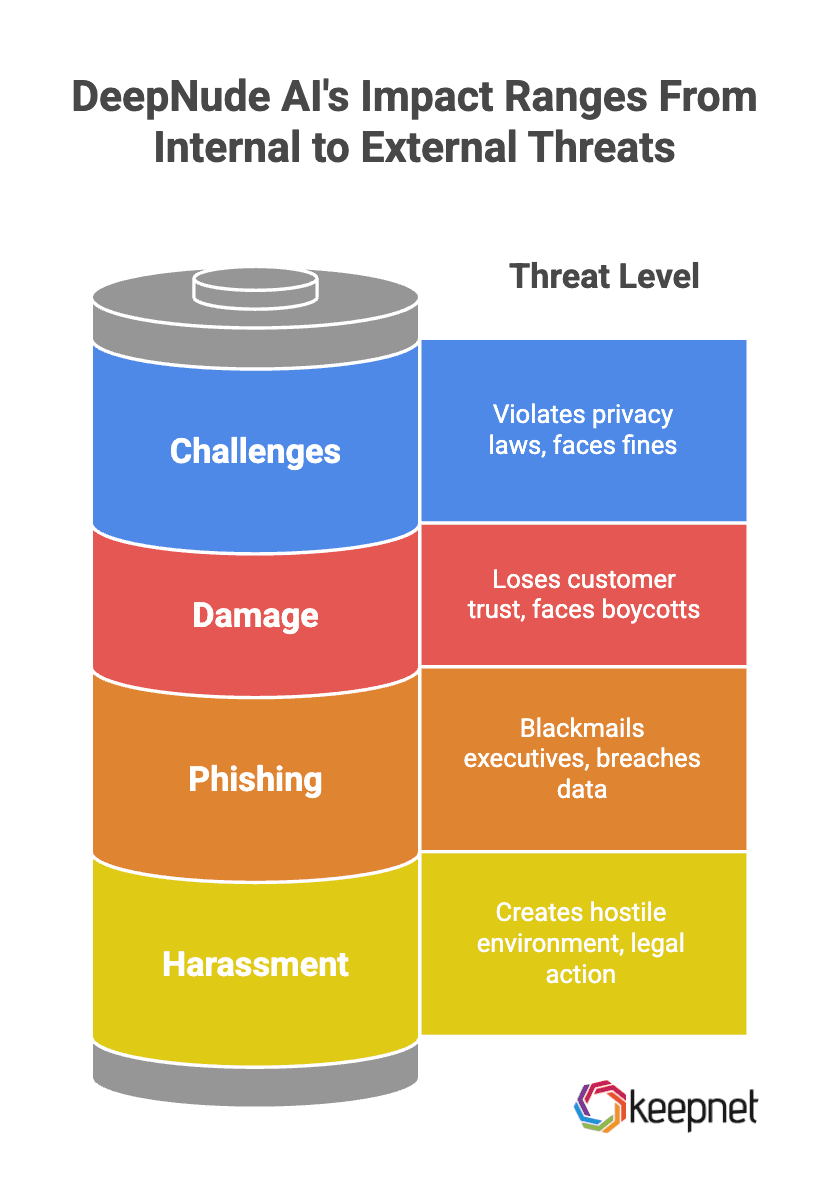

How DeepNude AI Poses a Threat to Companies

While DeepNude AI is often seen as a personal privacy issue, it also presents serious risks for businesses.

Companies can face reputational damage, legal liability, and cybersecurity threats due to the misuse of AI-generated fake images.

1. Workplace Harassment & Legal Risks

- Employees could misuse DeepNude AI to create and share fake explicit images of colleagues, leading to hostile work environments and legal action.

- Businesses may be held liable for failing to prevent AI-driven harassment under workplace laws.

2. Deepfake Phishing & Extortion Scams

- Cybercriminals can use AI-generated fake nudes to blackmail executives, pressuring them into paying ransoms or sharing sensitive company data.

- Deepfake scams targeting employees could lead to data breaches and financial fraud.

3. Reputational Damage & Public Trust

- If a company’s name is linked to AI-generated fake content scandals, it can lose customer trust and credibility.

- Brands associated with DeepNude AI misuse may face boycotts, legal scrutiny, or severe PR crises.

4. Compliance & Data Protection Challenges

- As AI-generated image abuse grows, businesses must ensure compliance with privacy laws like GDPR and CCPA to avoid fines and legal repercussions.

- Companies may need to implement AI detection tools and employee security awareness training to prevent internal misuse.

The Human Risk Factor

Technical controls alone cannot stop deepfake attacks. The primary target is always the human — an employee who clicks a link, authorizes a payment, or shares credentials. This is why organizations must invest in Security Awareness Training that specifically covers synthetic media threats, social engineering red flags, and out-of-band verification procedures.
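The out-of-band verification procedure mentioned above can be expressed as a simple decision rule. The following Python sketch is illustrative only: the action names, pressure cues, and directory lookup are hypothetical assumptions, not part of any Keepnet product or published standard. It shows the core idea that a sensitive request is confirmed through a contact channel the organization already trusts, never through contact details supplied in the request itself.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical actions that should never be approved on the strength of a
# single email, voice call, or video call alone.
SENSITIVE_ACTIONS = {"wire_transfer", "credential_reset", "data_export", "gift_card_purchase"}

# Illustrative pressure cues common in social engineering; not an exhaustive list.
PRESSURE_CUES = ("urgent", "confidential", "do not tell", "right now", "keep this between us")


@dataclass
class Request:
    claimed_sender: str        # who the message or call claims to come from
    channel: str               # e.g. "email", "voice", "video" (recorded for audit; not used below)
    action: str                # what the requester wants done
    message: str               # free-text body or call transcript
    callback_number: str = ""  # contact details supplied by the requester (untrusted)


def needs_out_of_band_check(req: Request) -> bool:
    """Flag requests for sensitive actions or requests that apply pressure."""
    text = req.message.lower()
    return req.action in SENSITIVE_ACTIONS or any(cue in text for cue in PRESSURE_CUES)


def trusted_callback(req: Request, directory: Dict[str, str]) -> Optional[str]:
    """Return a contact number from the organization's own directory.

    The callback number inside the request is deliberately ignored: an attacker
    using a deepfake voice or video controls that channel too.
    """
    return directory.get(req.claimed_sender)


# Usage: a video call that appears to come from the CFO asks for an urgent transfer.
directory = {"cfo@example.com": "+1-555-0100"}
req = Request(
    claimed_sender="cfo@example.com",
    channel="video",
    action="wire_transfer",
    message="Urgent: send the payment before the audit.",
    callback_number="+1-555-9999",  # supplied by the caller, never used
)
if needs_out_of_band_check(req):
    print("Hold request; verify via", trusted_callback(req, directory))
```

The design choice worth noting is that verification depends only on data the attacker cannot influence: the organization's own directory, not the number, link, or meeting invite that arrived with the request.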

How Keepnet Helps Organizations Fight Deepfake Threats

Keepnet's Human Risk Management Platform is designed to reduce the human attack surface that deepfake campaigns exploit. Key capabilities include:

- AI-Powered Phishing Simulations: Test employee responses to deepfake phishing and voice-based social engineering scenarios, identifying high-risk individuals before a real attack occurs.

- Adaptive Security Awareness Training: Deliver targeted, role-based training modules on deepfake threats, vishing, and identity verification best practices.

- Incident Responder: Automatically detect and neutralize phishing threats — including deepfake-assisted attacks — up to 48.6x faster than manual processes.

- Threat Intelligence: Surface breach data and compromised credentials that attackers may use to add credibility to deepfake impersonation campaigns.

Ethical Dilemmas of DeepNude AI

The biggest ethical issue is consent—who has the right to manipulate someone’s image without their permission?

DeepNude AI exploits this consent gap, allowing anyone to create non-consensual fake nudes with ease. Some developers market it as art or entertainment, but in reality, it is a dangerous tool for harassment and abuse.

The availability of free AI-powered undressing tools makes the problem worse, as anyone with bad intentions can misuse them. Regulators are trying to keep up, but the technology is evolving faster than the laws designed to stop it.

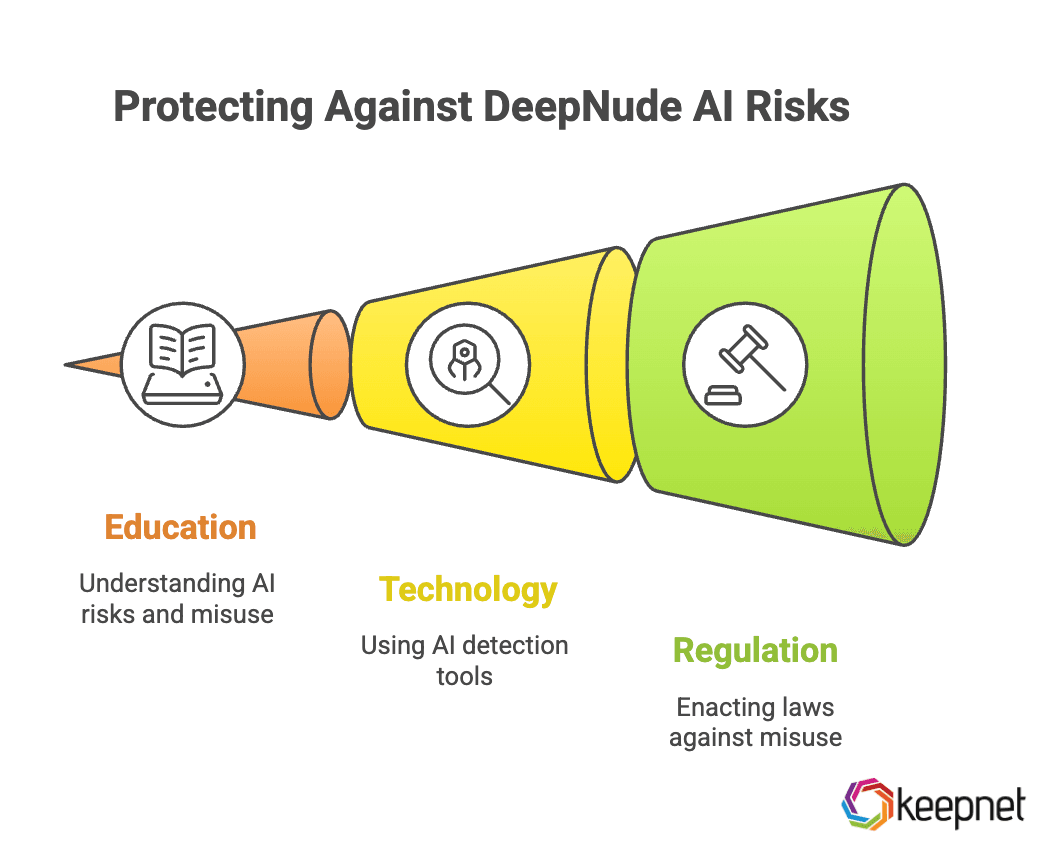

How to Protect Against DeepNude AI Risks

As AI-generated fake images become more advanced, individuals and businesses must take proactive steps to minimize harm. Here’s how to stay protected:

- Education: Understanding how DeepNude AI works helps individuals recognize and report misuse. Raising awareness is the first step in preventing harm.

- Technology: AI detection tools can help flag manipulated images so they can be removed before they spread. Platforms must invest in automated content moderation to limit damage.

- Regulation: Stronger laws are needed to criminalize non-consensual AI-generated images, ensuring offenders face legal consequences.

Check out Keepnet Security Awareness Training to educate employees on AI-driven threats and strengthen your organization’s cybersecurity defenses.

Enterprise Governance & Human Risk Strategy Against AI-Generated Abuse

While much of the public discussion around DeepNude AI focuses on privacy violations and celebrity cases, organizations must approach this issue from a governance and behavioral risk perspective.

Deepfake exploitation is not merely an ethical issue — it is a human-layer security challenge that intersects with workplace conduct, insider risk, identity protection, and executive security.

Unlike traditional cyberattacks that target systems, AI-generated nude deepfakes target:

- Employee reputation

- Executive credibility

- Psychological safety

- Organizational trust

This requires a structured response beyond technical controls.

Organizational Liability & Governance Accountability

Deepfake abuse introduces new layers of liability for employers.

If an employee becomes the victim of AI-generated explicit manipulation within a workplace context — or if internal actors misuse AI tools — organizations may face exposure related to:

- Workplace harassment claims

- Hostile environment litigation

- Negligent supervision allegations

- Data protection failures

- Inadequate policy enforcement

Regulators increasingly evaluate whether companies implemented reasonable preventive measures. In this context, governance frameworks must explicitly address synthetic media misuse.

This means updating:

- Acceptable Use Policies

- Digital conduct standards

- AI usage guidelines

- Incident response procedures

Without formalized controls, organizations risk being perceived as reactive rather than prepared.

Deepfake Abuse as a Human Risk Management Issue

AI-generated nude content is not just a technology problem — it is a behavioral exploitation vector.

Attackers frequently combine deepfake imagery with:

- Social engineering

- Extortion campaigns

- Executive impersonation

- Credential harvesting schemes

This makes deepfake abuse closely connected to broader human-centric attack methods, including phishing and synthetic impersonation.

A mature Human Risk Management Platform approach treats this as measurable behavioral risk, not random misconduct.

Key principles include:

- Identifying high-risk user groups

- Simulating synthetic impersonation scenarios

- Measuring reporting behavior

- Reinforcing secure verification habits
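To make "measuring reporting behavior" and "identifying high-risk user groups" more concrete, the following Python sketch aggregates hypothetical phishing simulation results by department and flags groups that click too often or report too rarely. The record schema, sample data, and thresholds are illustrative assumptions, not Keepnet's data model or an industry benchmark.

```python
from collections import defaultdict

# Each record is one simulated lure sent to one employee (hypothetical schema).
# outcome is one of: "reported", "clicked", "ignored".
results = [
    {"user": "ana", "department": "Finance", "outcome": "clicked"},
    {"user": "ben", "department": "Finance", "outcome": "reported"},
    {"user": "cy", "department": "Finance", "outcome": "clicked"},
    {"user": "dee", "department": "Engineering", "outcome": "reported"},
    {"user": "eli", "department": "Engineering", "outcome": "ignored"},
    {"user": "fay", "department": "Engineering", "outcome": "reported"},
]


def rates_by_group(records, key="department"):
    """Compute click and report rates for each group."""
    counts = defaultdict(lambda: {"sent": 0, "clicked": 0, "reported": 0})
    for r in records:
        c = counts[r[key]]
        c["sent"] += 1
        if r["outcome"] in ("clicked", "reported"):
            c[r["outcome"]] += 1
    return {
        group: {
            "click_rate": c["clicked"] / c["sent"],
            "report_rate": c["reported"] / c["sent"],
        }
        for group, c in counts.items()
    }


def high_risk_groups(rates, max_click=0.3, min_report=0.5):
    """Flag groups whose click rate is too high or whose report rate is too low.

    The default thresholds are illustrative assumptions only.
    """
    return sorted(
        g for g, r in rates.items()
        if r["click_rate"] > max_click or r["report_rate"] < min_report
    )


rates = rates_by_group(results)
print(high_risk_groups(rates))  # Finance: 2 of 3 clicked, so it is flagged
```

Tracking the report rate alongside the click rate matters because a group that rarely clicks but also rarely reports still gives the security team no early warning of a live campaign.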

By embedding deepfake phishing simulations into a structured Security Awareness Training program, organizations strengthen both prevention and response readiness.

Building Preventive Culture Through Behavioral Training

Technical detection tools are improving, but no system can fully prevent misuse of generative AI.

What determines impact is response speed and employee awareness.

Organizations should:

- Train employees to recognize synthetic manipulation risks

- Establish confidential reporting channels

- Reinforce digital consent principles

- Conduct scenario-based simulation exercises

Combining awareness initiatives with Phishing Simulation software allows security teams to test how employees react under pressure in scenarios involving impersonation, coercion, or manipulated media.

When employees understand that synthetic abuse is a recognized corporate threat, not just an internet controversy, reporting rates improve and damage containment accelerates.

The Future of AI-Generated Threats

AI tools like DeepNude AI are no longer just technological novelties—they pose serious risks to privacy, security, and digital ethics. As these tools evolve, so must our ability to detect, regulate, and prevent their misuse.

At Keepnet, we analyze these emerging threats, shedding light on both their capabilities and their dangers. What started as a fringe technology has now become a major cybersecurity concern.

To stay ahead of AI-driven threats, read our blog on Deepfakes: How to Spot Them and Stay Protected and learn how to identify and defend against deepfake manipulation.

Also watch our security awareness training podcast series on YouTube for more insights on DeepNude AI and how to protect yourself against it.

Editor's note: This article was updated on Feb 19, 2026.