How AI Voice Cloning and Caller ID Spoofing Work

Discover how voice phishing uses AI to mimic voices and fake caller IDs. Learn how AI voice cloning and caller ID spoofing are orchestrated. Gain insights into securing yourself against such advanced voice phishing techniques.

Voice phishing has emerged as a particularly dangerous social engineering technique. Hackers use email, SMS, and voice to launch advanced attacks on people and groups, always seeking new exploitation methods. But what makes voice such a crucial element in these social engineering attacks?

AI voice cloning and caller ID spoofing have emerged as significant cybersecurity threats, leading to substantial financial losses, operational disruptions, and reputational damage.

In 2022, vishing attacks, often utilizing AI voice cloning and caller ID spoofing, resulted in a median financial loss of $1,400 per victim, contributing to a total loss of $1.2 billion.

In September 2023, MGM Resorts suffered a cyberattack in which attackers reportedly impersonated an employee over the phone. The resulting system outages caused slot machines to malfunction and disrupted hotel operations, leading to significant revenue losses.

In 2023, a deepfake audio clip impersonating UK Labour leader Keir Starmer was released. It falsely portrayed him in a negative light, raising concerns about the misuse of AI for political manipulation and damaging public trust.

These incidents underscore the critical need for robust security measures and public awareness to mitigate the risks associated with AI-driven voice cloning and caller ID spoofing.

The Power of Voice in Gaining Trust

Voice plays a significant role in these attacks. The reason is simple yet profound: it's all about trust.

A human voice can convey emotion, empathy, and urgency in ways that texts or emails can't. When a target hears a voice, it instinctively feels more personal and credible. This psychological edge is what hackers exploit to gain their victim's trust and, ultimately, achieve their nefarious goals.

AI's Role in Voice Cloning

AI greatly changed cybersecurity in 2023, making voice phishing a major threat. Rapid progress in AI has produced voice cloning technology that can accurately mimic a person's voice, which makes voice phishing scams highly persuasive.

This technology lets scammers pose as family members, friends, or authority figures on phone calls. AI-assisted scams, including voice phishing, phishing emails, and deepfake videos, compound the problem because they all exploit people's trust in digital communication.

AI voice phishing scams personalize attacks, making them harder to detect. Tackling them effectively calls for a smarter, more watchful approach to cybersecurity.

Here is an example of voice cloning. You can either use a pre-recorded voice or upload a sample of someone else's voice and clone it. Then, when you speak, your voice sounds like the person whose voice you cloned.

The Selection and Training Process

The journey began with selecting a distinct voice to clone. I chose a webinar hosted by CFE Certification in which Simon speaks. His clear and articulate speaking style made him an ideal candidate.

Here’s how I embarked on this intriguing project:

Finding the Perfect Voice Sample: I found a YouTube video of Simon speaking that had the kind of voice I was looking for.

Watch our “How Vishing Works - Don’t Be the Next Vishing Victim!” webinar on YouTube! The vishing webinar video provides a long, clear sample of his voice, which is ideal for training my AI model.

Leveraging Open-Source AI: I used 'Magnio-RVC,' an open-source AI voice cloning repository, to do the cloning. This powerful tool offers remarkable capabilities for processing and replicating human voices. Training involved feeding the model Simon's voice data so it could learn and imitate his vocal nuances accurately.

Bringing the Cloned Voice to Life: The most exciting part was testing the cloned voice. Using the same Magnio-RVC tool, I had the AI apply the newly trained voice in real-time conversations over the phone. The result was astonishingly realistic, demonstrating the AI's ability to mimic the tone and subtleties of human speech.

A Sample of the Cloning Marvel

To give you a quick look into this technological process, here are samples of the original and cloned voices:

My original voice:

Cloned voice using voice record:

AI-Generated voice sample:

Cloned voice using text-to-speech:

The cloned voice sounds just like the original, which shows how accurately AI can clone voices and how much potential the technology has.

What is Caller ID Spoofing in Voice Phishing?

Caller ID spoofing makes the problem worse, and it is itself one form of vishing. It is one of the core enablers of these attacks: by faking the caller ID, attackers disguise their true identity on incoming calls and mislead victims.

Many countries have regulations requiring telecommunication companies to implement security practices that block caller ID spoofing, but their effectiveness varies. In some countries, including the US, services still allow hackers to display any caller ID they want. So you could receive a call that appears to come from your local police station when the caller is actually a hacker.

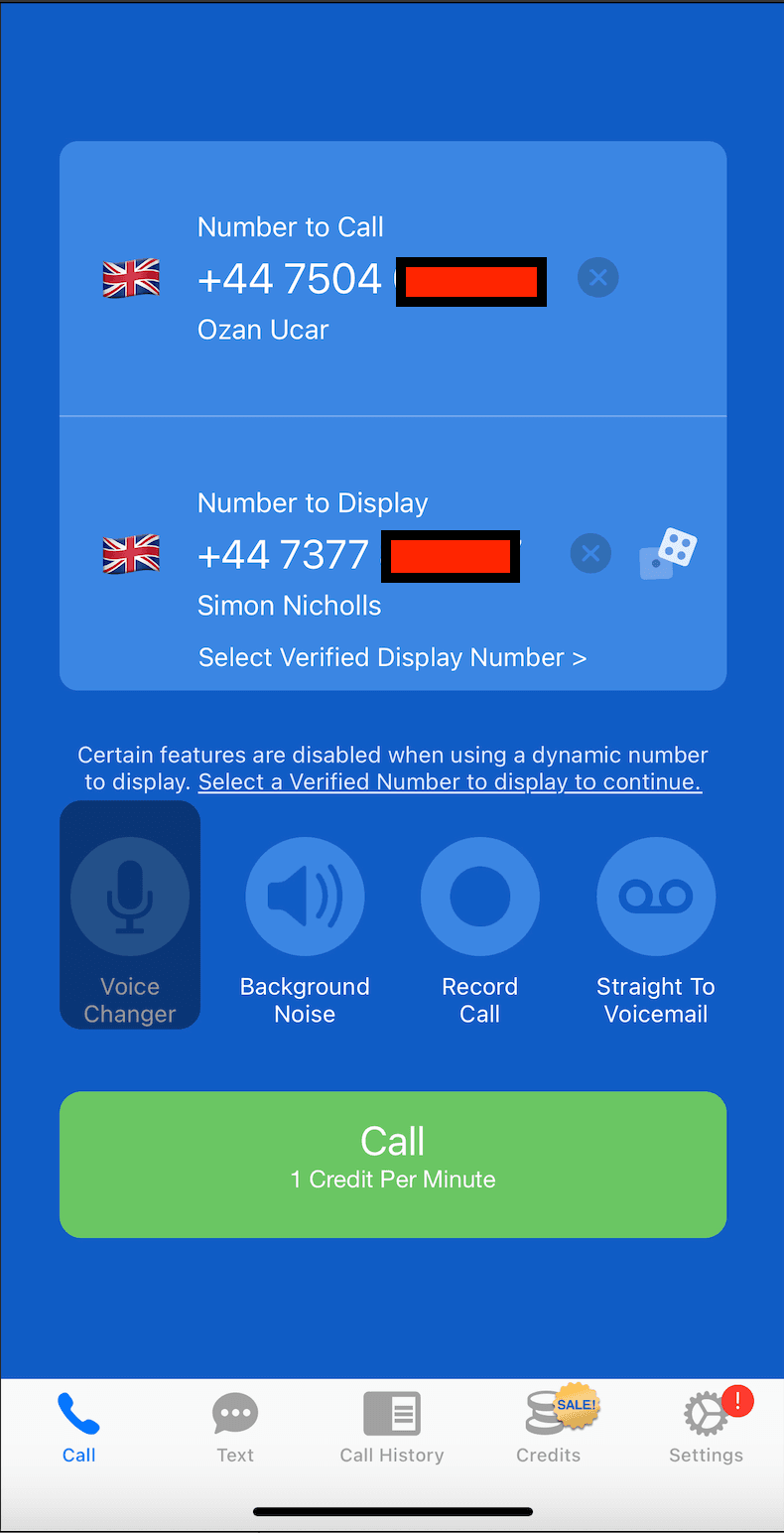

Here is a real example of caller ID spoofing. The apps we use for testing are also available to bad actors, scammers, and hackers! In this real-world example, we call Ozan while appearing to be Simon.

Ozan will see Simon as the caller, even though it is not him. That is a great example of a vishing attack in which hackers easily spoof someone else's identity to gain the victim's trust on the phone. How can the victim tell whether the person speaking on the phone is real?

Is AI voice cloning legal?

The legal landscape of AI voice cloning is complex and varies significantly across jurisdictions. The legality of using this technology depends on several crucial factors, including consent, the intended use of the cloned voice, and applicable copyright and privacy laws.

Here are some key points on the legality of AI voice cloning:

- AI voice cloning legality varies by country.

- Key factors include consent, purpose, and copyright laws.

- Without consent, it may violate personal or intellectual property rights.

- When used ethically, for example to train people against real AI voice-cloned phishing attacks, it is generally legal.

Combining Voice Cloning and Caller ID Spoofing

When voice cloning and caller ID spoofing combine, they create a real danger. This potent mix can make voice phishing attacks incredibly convincing and dangerous. If the victim recognizes the caller ID and voice, they are more likely to be tricked.

How do I protect myself from AI voice cloning?

Awareness: Your Best Defense

However, there's good news: awareness, combined with practice on simulated voice calls using a vishing simulation tool, can be a powerful way to identify and prevent voice phishing attacks.

Here are some tips:

- Be Skeptical of Suspicious Calls: If a call seems dubious, tell the caller you will call them back to verify the number. This can help determine if the caller ID has been spoofed.

- Guard Your Sensitive Information: Never share sensitive information over the phone. Legitimate organizations no longer request personal information this way.

- Report Suspicious Calls: If you think a call is a phishing scam, tell the police or relevant authorities immediately.
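The tips above can be expressed as a simple decision rule. The following is a minimal sketch, not a real product: the `TRUSTED_DIRECTORY` entries, the `IncomingCall` fields, and the `next_step` function are all hypothetical names invented for illustration. It assumes you keep a list of phone numbers verified through a separate channel, and it treats the displayed caller ID as untrusted because it can be spoofed.

```python
from dataclasses import dataclass

# Hypothetical directory of numbers verified through a separate channel
# (for example, an official website). Names and numbers are made up.
TRUSTED_DIRECTORY = {
    "Simon": "+15550100",
    "Local Police": "+15550199",
}


@dataclass
class IncomingCall:
    claimed_identity: str          # who the caller says they are
    displayed_number: str          # caller ID on screen (can be spoofed)
    asks_for_sensitive_info: bool  # passwords, codes, payment details, ...


def normalize(number: str) -> str:
    """Keep digits and a leading '+', so formatting differences do not matter."""
    digits = "".join(ch for ch in number if ch.isdigit())
    return ("+" if number.strip().startswith("+") else "") + digits


def next_step(call: IncomingCall, directory: dict = TRUSTED_DIRECTORY) -> str:
    """Suggest what to do with a call, never trusting the displayed caller ID alone."""
    known = directory.get(call.claimed_identity)
    if known is None:
        return "no trusted number on file: do not share anything, verify another way"
    mismatch = normalize(call.displayed_number) != normalize(known)
    # A matching caller ID is not proof of identity, so any request for
    # sensitive information still triggers a callback on the trusted number.
    if call.asks_for_sensitive_info or mismatch:
        return f"hang up and call back on {known}"
    return "proceed with normal caution"


if __name__ == "__main__":
    spoofed = IncomingCall("Simon", "+1 (555) 0100", asks_for_sensitive_info=True)
    print(next_step(spoofed))
```

The key design choice is that the callback always goes to the number from the trusted list, never to the number shown on screen or one the caller provides.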

Editor's Note: This blog was updated on December 4, 2024.