What is AI-Powered Ransomware? Definition, Examples, and Prevention

AI-powered ransomware is ransomware enhanced by AI—used to automate recon, craft convincing lures, adapt payload behavior, and scale extortion. This guide explains what it is, real-world research examples, and a prevention checklist CISOs can use today.

AI is changing ransomware in a practical way: less manual work for attackers, faster iteration, and more persuasive social engineering at scale. The UK’s National Cyber Security Centre (NCSC) has warned that AI is likely to increase the volume and heighten the impact of cyberattacks in the near term, and that it will likely contribute to the ransomware threat.

That’s why defending against ransomware in 2026 is no longer only about endpoint controls and backups. It’s also about reducing human-led initial access (phishing, impersonation, deepfakes, supplier fraud) and shortening time-to-detect with behavior-focused monitoring and response playbooks.

For a 2026-ready program that reduces human-led initial access, see our Cybersecurity Awareness Training guide.

AI-Powered Ransomware (Definition)

AI-powered ransomware is ransomware that uses AI systems (including large language models) to automate, accelerate, or adapt parts of the ransomware attack lifecycle—such as reconnaissance, lure creation, decision-making during execution, and extortion messaging.

“AI-powered ransomware” today mostly means AI-assisted operations (phishing, recon, negotiation). Fully LLM-orchestrated ransomware has so far been documented mainly in research prototypes, but those prototypes show where the threat is heading.

Check the 2025 Verizon DBIR key facts.

Important nuance: AI-powered ransomware does not necessarily mean “a fully autonomous robot hacker.” Most real-world “AI” gains today come from AI-assisted steps around the ransomware itself, such as better phishing lures, faster recon, multilingual persuasion, and more convincing impersonation, rather than from a brand-new encryption engine.

AI-Powered vs. “Traditional” Ransomware

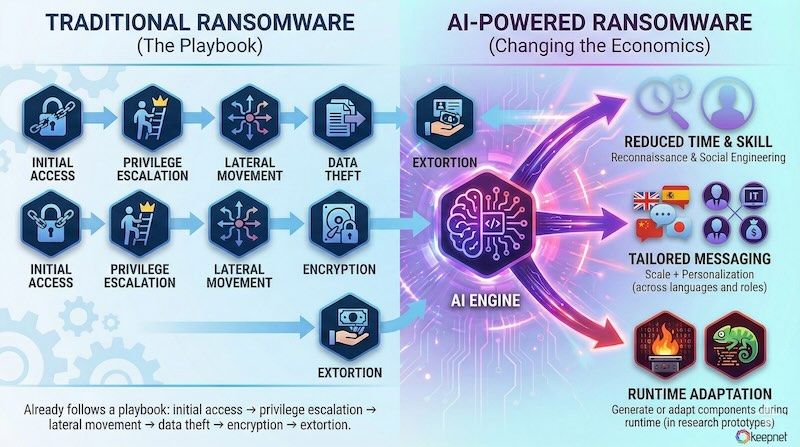

Traditional ransomware operations already follow a playbook: initial access → privilege escalation → lateral movement → data theft → encryption → extortion.

AI changes the economics:

- reduces the time and skill needed for reconnaissance and social engineering

- enables more tailored messaging across languages and roles (scale + personalization)

- can be used (in research prototypes) to generate or adapt components during runtime

A Practical “Maturity Model” for AI-Powered Ransomware

Think of AI-powered ransomware in levels (this helps you explain risk to leadership without hype):

Level 1 — AI-Assisted Ransomware Operations (Most common today)

AI helps attackers:

- write and localize phishing lures

- summarize stolen data for extortion leverage

- generate convincing “helpdesk” or “vendor” scripts

- craft role-specific bait (finance vs HR vs IT)

This aligns with broader assessments that GenAI increases speed/scale of recon and social engineering.

Level 2 — AI-Augmented Decision Support

AI helps attackers choose:

- which hosts/users are most valuable

- what data to steal first

- how to phrase threats and negotiate

Law enforcement assessments warn AI is making organized crime more scalable and efficient—harder to detect and disrupt.

Level 3 — AI-Orchestrated / Self-Composing Ransomware (Emerging, proven in research)

Here, AI/LLMs can be used to plan and generate parts of the lifecycle dynamically in a closed loop. A 2025 research paper (“Ransomware 3.0”) describes an LLM-orchestrated prototype that synthesizes components at runtime and adapts to the environment.

Examples of AI-Powered Ransomware (Real, Not Marketing)

The examples below are documented cases rather than speculation: a vendor-reported proof-of-concept, an academic prototype, and the AI-enabled social engineering that already feeds conventional ransomware operations.

Example 1: “PromptLock” (ESET) — AI-Powered Ransomware Proof-of-Concept

ESET reported “PromptLock” as a proof-of-concept demonstrating how a configured AI model could enable complex, adaptable malware behavior—lowering the barrier to creating sophisticated attacks.

Key takeaway for defenders: even as a PoC, it confirms the direction of travel toward more automation, more variation, and less predictable tooling.

Example 2: “Ransomware 3.0” (Academic Research) — LLM-Orchestrated Lifecycle

The “Ransomware 3.0: Self-Composing and LLM-Orchestrated” paper proposes a threat model and prototype where the ransomware lifecycle can be executed with runtime code synthesis and adaptive behavior.

Key takeaway: detection needs to rely less on static signatures and more on behavioral signals and telemetry correlation.
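To make “telemetry correlation” concrete, here is a minimal Python sketch. It assumes alerts have already been normalized into `(timestamp, host, signal_name)` tuples sorted by time; the function name, the signal names, and the thresholds (three distinct signals within 30 minutes) are hypothetical and only illustrate the idea of escalating when several weak behavioral signals cluster on one host.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def correlate_signals(alerts, min_distinct=3, window=timedelta(minutes=30)):
    """Return {host: sorted signal names} for hosts where at least
    `min_distinct` different signals occurred within `window`.

    `alerts` is an iterable of (timestamp, host, signal_name) tuples,
    assumed to be sorted by timestamp.
    """
    by_host = defaultdict(list)
    for ts, host, signal in alerts:
        by_host[host].append((ts, signal))

    hits = {}
    for host, items in by_host.items():
        start = 0
        for end in range(len(items)):
            # Shrink the window from the left until it spans <= `window`.
            while items[end][0] - items[start][0] > window:
                start += 1
            distinct = {name for _, name in items[start:end + 1]}
            if len(distinct) >= min_distinct:
                hits[host] = sorted(distinct)
                break
    return hits


if __name__ == "__main__":
    t0 = datetime(2026, 1, 1, 9, 0)
    sample = [
        (t0, "srv-01", "new_admin_logon"),
        (t0 + timedelta(minutes=5), "srv-01", "mass_file_rename"),
        (t0 + timedelta(minutes=12), "srv-01", "large_outbound_transfer"),
    ]
    print(correlate_signals(sample))
```

Each signal on its own may be too noisy to alert on; the design choice here is to act only when different kinds of signals line up on the same host in a short period.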

Example 3: AI-Enabled Social Engineering as the Ransomware “Front Door”

Even when the encryption payload is “traditional,” AI can make the entry phase stronger:

- multilingual, natural-sounding pretexting

- more believable impersonation

- faster tailoring of messages to specific org roles

Europol reporting on AI-driven crime highlights how AI can improve scale and impersonation capabilities, which directly strengthens ransomware access paths.

Why AI-Powered Ransomware Is So Risky

AI-powered ransomware is risky because it automates and scales attack techniques: phishing campaigns become more sophisticated and personalized, and malware can adapt more quickly to defensive measures. Four effects stand out:

1) Higher success rates at the “human layer”

If lures are personalized, grammatically flawless, and context-aware, more people click, more credentials leak, and more endpoints get compromised.

2) Faster time-to-impact

AI reduces attacker “cycle time.” That means defenders have less time between initial access and encryption.

3) More variation (harder to block with static rules)

If components and text content vary more frequently, purely signature-based controls lose effectiveness.

4) Extortion gets smarter

Attackers can craft more specific threats, more persuasive negotiations, and more targeted reputational pressure.

Red Flags of AI-Powered Ransomware Campaigns (Before Encryption)

AI doesn’t have to write the encryptor to make ransomware succeed. In many cases, it strengthens the “front door”: impersonation, persuasion, and fast recon. Watch for these early signals:

- Unusual login prompts or repeated MFA pushes you didn’t initiate (fatigue-style approval scams).

- Unexpected “IT support” calls or chat messages asking you to install tools, reset credentials, or “verify” MFA.

- Vendor/supplier messages that feel urgent and polished, especially involving invoices, bank changes, or “updated payment details.”

- QR codes in unexpected places (posters, emails, chat) pushing you to “re-authenticate” or “view a secure document.”

- Mass file access anomalies (sudden spikes in file reads/writes), especially on shared drives.

- Data staging behavior (large archive creation, unusual outbound transfers) before encryption begins.
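As one illustration of turning a red flag from the list above into a detection, the Python sketch below looks for bursts of unapproved MFA pushes. It assumes your identity provider's logs can be exported as `(timestamp, user, outcome)` tuples sorted by time; the outcome labels (`"denied"`, `"timeout"`, `"approved"`) and the thresholds are assumptions for the example, not any vendor's actual schema.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

# Assumed outcome labels for pushes the user did not approve.
UNAPPROVED = {"denied", "timeout"}


def find_mfa_fatigue(events, max_pushes=5, window=timedelta(minutes=10)):
    """Return the set of users who received more than `max_pushes`
    unapproved MFA pushes inside any sliding `window`.

    `events` is an iterable of (timestamp, user, outcome) tuples,
    assumed to be sorted by timestamp.
    """
    recent = defaultdict(deque)
    flagged = set()
    for ts, user, outcome in events:
        if outcome not in UNAPPROVED:
            continue
        pushes = recent[user]
        pushes.append(ts)
        # Drop pushes that have fallen out of the sliding window.
        while ts - pushes[0] > window:
            pushes.popleft()
        if len(pushes) > max_pushes:
            flagged.add(user)
    return flagged


if __name__ == "__main__":
    t0 = datetime(2026, 1, 1, 9, 0)
    burst = [(t0 + timedelta(minutes=i), "alice", "denied") for i in range(6)]
    print(find_mfa_fatigue(burst))
```

A flagged user is a prompt for follow-up (contact the user, review the next successful login), not proof of compromise.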

If you want to reduce these entry paths, your training has to mirror them—across email, voice, chat, SMS, and QR—not just “classic phishing.”

The Best Prevention Strategy (Built for AI-Powered Ransomware)

The goal is not “stop AI.” The goal is to break the ransomware chain at multiple points and recover fast.

CISA’s #StopRansomware guidance provides a widely adopted baseline: prevention best practices plus a response checklist aligned with operational insights from agencies.

NIST is also advancing ransomware risk management guidance mapped to the Cybersecurity Framework.

1) Reduce initial access (where AI helps attackers most)

- Phishing-resistant MFA for admins and remote access

- Block risky attachment types; sandbox unknown files

- Harden internet-facing services (VPN/RDP exposure, weak configs)

- Security awareness training focused on modern lures (deepfake, QR, supplier fraud)

2) Limit blast radius (assume one endpoint will fail)

- Least privilege + remove local admin where possible

- Network segmentation (especially backups, AD, critical apps)

- Privileged access monitoring and rapid credential rotation capabilities

3) Detect earlier (behavior > signatures)

- Endpoint detection tuned for suspicious encryption-like behavior and mass file changes

- Alerting for unusual credential use, lateral movement signals, and data staging/exfil patterns
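One common heuristic behind “encryption-like behavior” is byte entropy: encrypted output looks close to random, so its Shannon entropy approaches 8 bits per byte. The Python sketch below shows the calculation. The 7.5 threshold and the function names are assumptions for illustration. Compressed formats (ZIP, JPEG, and similar) also score high, so in practice this signal is combined with others, such as many files being rewritten in a short period.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy of `data` in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    return -sum(
        (count / total) * math.log2(count / total)
        for count in Counter(data).values()
    )


def looks_encrypted(data: bytes, threshold: float = 7.5) -> bool:
    """Heuristic only: True when entropy is at or above `threshold`.

    The default threshold is an illustrative assumption and should be
    tuned against real file samples.
    """
    return shannon_entropy(data) >= threshold


if __name__ == "__main__":
    import os

    print(looks_encrypted(b"quarterly report draft " * 200))
    print(looks_encrypted(os.urandom(4096)))
```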

4) Make recovery boring (this is how you win)

- Immutable/air-gapped backups + regular restore tests

- Documented ransomware runbook and tabletop exercises

- Clear decision-making plan (legal, comms, cyber insurance, regulators)
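Restore tests, listed above, are easier to run regularly when part of the check is automated. The following Python sketch assumes you record a manifest of SHA-256 hashes when a backup is taken and later compare a test restore against it; the function names and the manifest layout (relative path mapped to hex digest) are hypothetical, not part of any backup product.

```python
import hashlib
from pathlib import Path


def sha256_file(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large files do not fill memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's path relative to `root` to its SHA-256 digest."""
    return {
        path.relative_to(root).as_posix(): sha256_file(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def verify_restore(manifest: dict[str, str], restored_root: Path):
    """Return a list of (relative_path, problem) for files that are
    missing from the restore or whose contents differ."""
    problems = []
    for rel_path, expected in manifest.items():
        target = restored_root / rel_path
        if not target.is_file():
            problems.append((rel_path, "missing"))
        elif sha256_file(target) != expected:
            problems.append((rel_path, "hash mismatch"))
    return problems
```

An empty list from `verify_restore` means every file in the manifest was restored with identical contents. It does not prove the restored systems actually boot or that applications work, so it complements a full restore exercise rather than replacing it.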

Microsoft Incident Response also publishes ransomware response playbook guidance that can help teams structure their process.

Check our guide to learn how to protect your business against ransomware.

Checklist: 10 Things That Stop AI-Powered Ransomware

- Phishing-resistant MFA for privileged access

- Patch cadence + hardening of exposed services

- Least privilege + remove standing admin

- EDR + behavior-based detections

- Segmentation (especially identity + backups)

- Block/contain risky file execution paths

- Immutable + tested backups

- Incident response playbooks + tabletop exercises

- Vendor/supplier verification process (out-of-band confirmation)

- Training that mirrors real attacker tactics (role-based lures)

How Keepnet Protects Against AI-Based Ransomware

AI-powered ransomware is as much a behavior problem as a technical problem, because AI amplifies persuasion, impersonation, and speed. The winning program combines layered controls with realistic, measurable training that reduces risky actions and increases early reporting.

If you’re building a human-risk program, Keepnet’s Human Risk Management Platform can help you reduce ransomware entry paths by training and testing the exact behaviors attackers exploit.

- Security Awareness Training helps teams build habits for modern lures (deepfake voice, QR phishing, callback scams, MFA fatigue prompts).

- Phishing Simulator helps you rehearse realistic social-engineering scenarios and measure behavior change over time.

- Incident Responder helps teams operationalize reporting and response workflows when suspicious activity is detected.

Keepnet has been named a go-to vendor for stopping deepfake and AI disinformation attacks by Gartner.