What Is Chatbot Poisoning? Risks, Detection & Protection

Discover how chatbot poisoning, a rising AI security threat affecting organizations in 2025, can silently compromise your LLM applications, and what you can do to defend against it.

As more businesses use AI chatbots for support, sales, and internal tools, a new risk is growing fast: chatbot poisoning.

In simple words, chatbot poisoning is when attackers secretly corrupt the data or settings your chatbot learns from, so that it behaves in a harmful, biased, or unsafe way. It is a form of AI data poisoning, where someone changes training data or knowledge sources to twist how the model responds.

This is not science fiction. Security researchers and standards bodies now list data and model poisoning as a key risk for large language models (LLMs) and AI systems.

In this guide, you will learn:

- What chatbot poisoning is (in clear language)

- How attackers poison your chatbot

- The main types of poisoning attacks

- Real risks for your business

- How to detect and protect against chatbot poisoning

Simple Definition: What Is Chatbot Poisoning?

Let’s keep it very clear.

Chatbot poisoning happens when attackers manipulate the data, prompts, or configuration that a chatbot uses to learn or answer questions, so that the chatbot starts giving wrong, unsafe, or attacker-controlled responses.

This manipulation can happen at different stages:

- When the model is trained or fine-tuned

- When you connect it to a knowledge base or RAG system (Retrieval-Augmented Generation)

- When you adjust its alignment or safety rules

- When it learns over time from user feedback or uploaded content

The result is the same: the chatbot still “looks normal”, but some answers are now biased, misleading, or actively dangerous.

How Does Chatbot Poisoning Work?

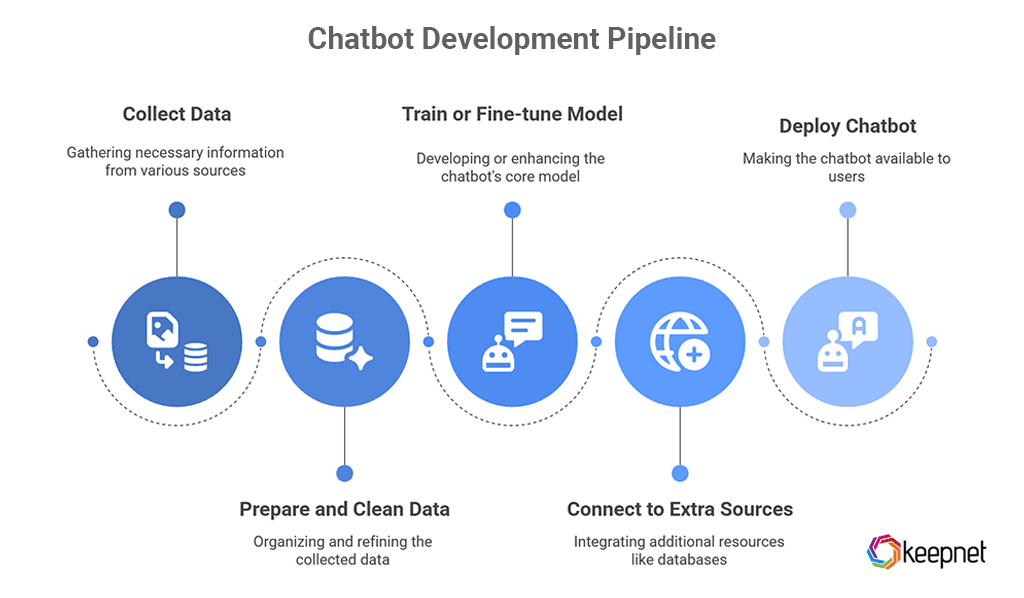

Most modern chatbots do not only use the model itself. They sit on top of a whole pipeline:

- Collect data (documents, websites, logs, FAQs)

- Prepare and clean data

- Train or fine-tune the model

- Connect to extra sources (RAG, databases, tools)

- Deploy the chatbot to users

Attackers try to insert malicious content into one or more of these steps. For example:

- Uploading poisoned documents into your knowledge base

- Editing a shared wiki page that your chatbot indexes

- Sneaking hostile examples into fine-tuning data

- Corrupting alignment data so the model “prefers” unsafe answers

- Feeding large amounts of toxic content into systems that “self-learn” from user input

Recent research even shows that a small number of poisoned documents can be enough to trigger unwanted behavior in large LLMs.

Main Types of Chatbot Poisoning Attacks

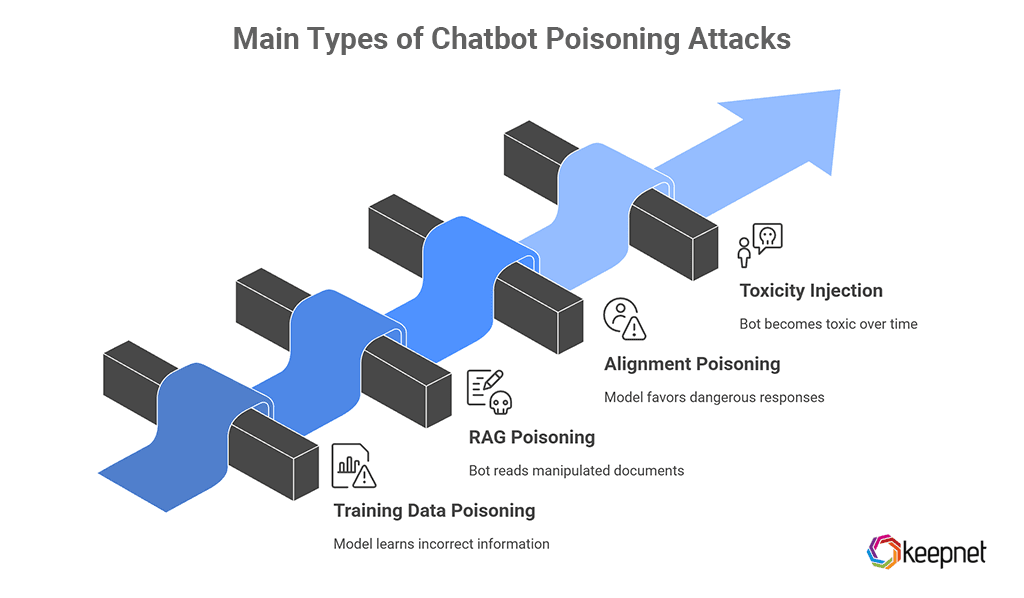

There is no single style of attack. Let’s break it down into simple categories.

1. Training Data Poisoning

This is the classic form of AI poisoning. Attackers inject bad examples into the training or fine-tuning data, so the model learns the wrong things.

Examples:

- Adding fake “best practices” that tell the model to share passwords if the user uses a certain phrase

- Inserting biased or hateful content so the chatbot repeats offensive language

- Planting backdoors: if the user types a secret trigger word, the model ignores safety rules

OWASP lists training data poisoning as a core risk that can lead to biased, harmful, or exploitable outputs.

2. RAG / Knowledge Base Poisoning

Many enterprise chatbots use RAG: they search a document store, then the model answers based on those documents.

If attackers can edit that document store, they can poison your chatbot without touching the model itself.

Examples:

- Editing internal FAQs to include fake refund rules

- Changing product docs so the bot gives wrong security settings

- Adding malicious links into a “trusted” knowledge article

This is sometimes called RAG poisoning. The model is still “smart”, but it reads lies.

3. Alignment / Safety Poisoning

Chatbots are often “aligned” with safety rules using methods like preference data, red-teaming feedback, and RLHF (Reinforcement Learning from Human Feedback).

Recent research shows that attackers can also poison the alignment process itself, so the model learns to favor dangerous responses in specific edge cases.

Example:

- During alignment, introduce many examples where the “preferred” answer is slightly more permissive

- The model later becomes easier to jailbreak or push into unsafe territory

4. Toxicity Injection and “Self-Learning” Poisoning

Some chatbots learn from chat logs or user feedback over time.

If there is no strong filter, attackers can:

- Flood the system with toxic conversations

- Upvote bad answers and downvote good ones

- Push the bot toward extreme, offensive, or manipulative language

Over time, the chatbot “shifts” and becomes more toxic or unreliable.

Why Chatbot Poisoning Matters: Real Risks

Chatbot poisoning is not just a technical glitch. It can hurt your business in many ways.

1. Misinformation and Fraud

A poisoned chatbot can:

- Give false legal, medical, or financial information

- Tell customers to send money to the wrong account

- Approve risky operations or unsafe settings

In some cases, the bot might be secretly tuned to help attackers, for example, by directing users to phishing pages.

2. Data Leakage

Poisoned models can be trained to leak sensitive data when triggered.

For example, a hidden backdoor might cause the chatbot to reveal:

- Internal usernames and emails

- API keys or partial credentials

- Confidential project names

Studies already show that many chatbots are easy to jailbreak into giving dangerous or restricted content.

3. Reputational and Compliance Damage

If your public chatbot:

- Uses hate speech

- Shows strong bias

- Shares personal data

You may face regulatory issues, complaints, and news headlines. Regulators and security agencies now highlight AI security, including data poisoning, as a key governance issue across the AI lifecycle.

4. Silent Long-Term Degradation

The scariest part: poisoning may not be visible at first.

Your chatbot might:

- Work fine in most cases

- Fail only with certain trigger phrases

- Slowly drift into lower quality or subtle bias

So you lose trust in your AI, but you are not sure why.

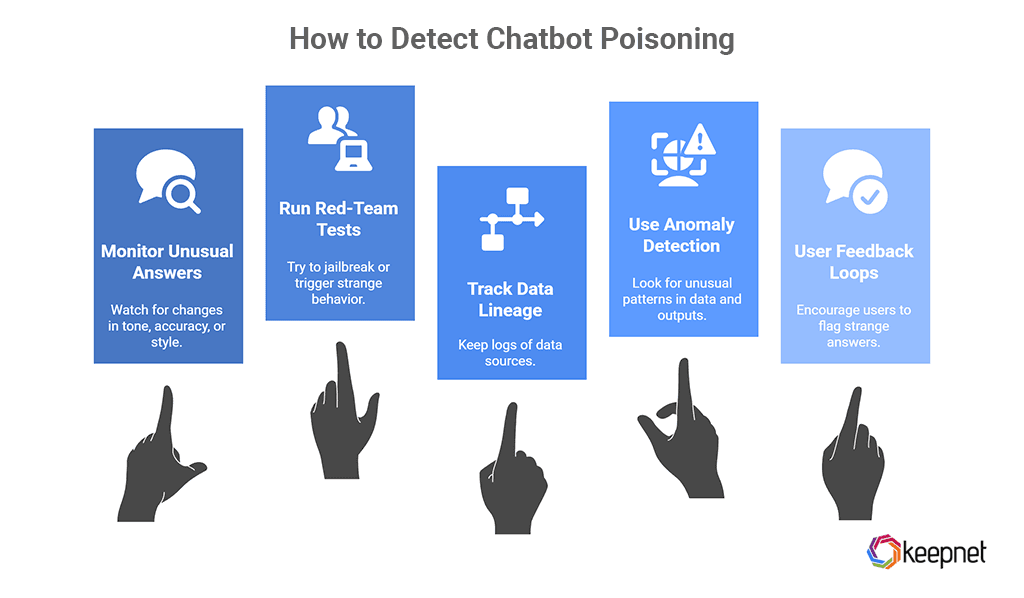

How to Detect Chatbot Poisoning

Detection of chatbot poisoning is hard, but not impossible. Combine technical checks with process and testing.

1. Monitor unusual answers

Watch for sudden changes in tone, accuracy, or style. Set alerts for certain sensitive topics.

2. Run regular red-team tests

Have internal or external teams try to jailbreak or trigger strange behavior. Compare to a baseline from earlier tests.

3. Track data lineage

Keep logs of where training, fine-tuning, and RAG data comes from. Unclear or new sources are higher risk.

4. Use anomaly detection on data and outputs

On data: look for unusual spikes, strange formats, or repeated patterns in new documents

On answers: detect abnormal sentiment, toxicity, or topic drift

5. User feedback loops

Make it easy for users and staff to flag “strange” answers. These signals are very valuable for early detection.

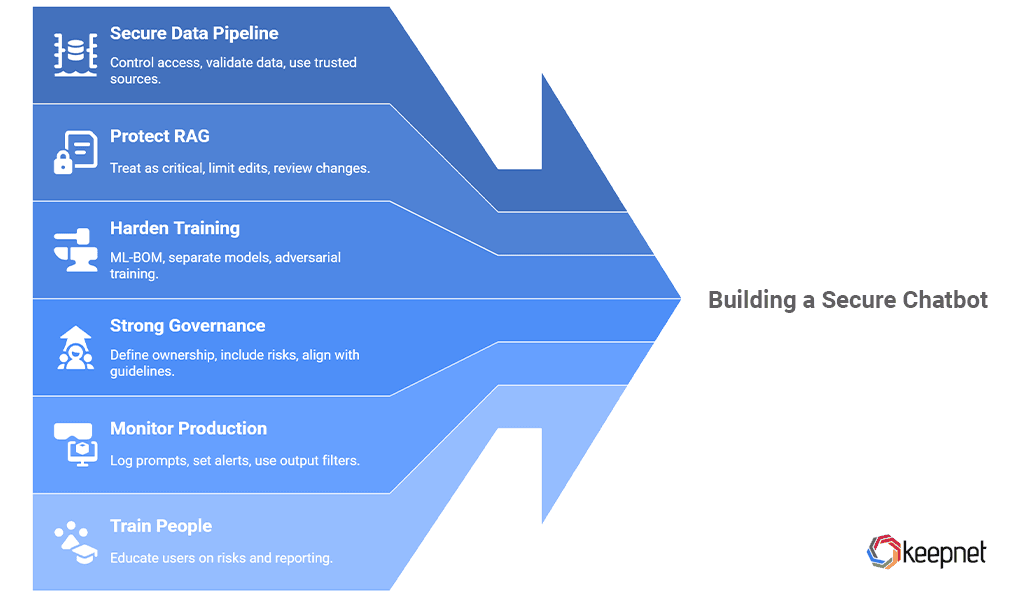

Now the practical part: How do you reduce the risk?

Security agencies and standards bodies suggest a full-lifecycle approach: secure design, development, deployment, and operations.

Here are concrete steps in simple language.

1. Secure Your Data Pipeline

- Control who can add or edit training data

Use access control and the principle of least privilege.

- Validate and clean data

Reject corrupted files and suspicious content. Use filters for malicious links, scripts, or prompt-like strings.

- Use diverse and trusted data sources

Avoid relying only on open, uncontrolled data from the public internet.

2. Protect RAG and Knowledge Bases

- Treat your document store as critical infrastructure.

- Limit who can edit internal wikis, FAQs, and policy docs.

- Require review or approval for changes in “high-impact” content, such as payment rules or compliance texts.

- Re-index and re-scan regularly to detect unexpected or malicious changes.

3. Harden Training and Alignment

- Keep a Machine Learning Bill of Materials (ML-BOM): record sources, versions, and checksums of training data.

- Separate models and fine-tuning sets for different use cases (for example, HR vs. customer support).

- Use adversarial training and robustness techniques to make the model less sensitive to poisoned samples.

4. Build Strong Governance

- Define who owns AI security in your organization.

- Include AI risks (including poisoning) in your risk register.

- Align with frameworks like ENISA, NCSC, and other AI security guidelines that cover threats such as data poisoning.

- Document decisions about tools, suppliers, and model updates.

5. Monitor in Production

- Log prompts, responses, and actions (with good privacy controls).

- Set alerts for suspicious patterns, like repeated trigger phrases or sudden changes in answer style.

- Use output filters for toxicity, personal data, or policy-breaking content. If filters suddenly start catching more issues, investigate.

6. Train People, Not Only Models

Technical controls are not enough. Everyone who touches your chatbot should know:

- What chatbot poisoning is

- Why “just adding a document” is not always safe

- Which data is never allowed in training or RAG

- How to report strange behavior or suspected poisoning

User awareness and clear processes are powerful defenses.

Final Thoughts

Chatbot poisoning turns one of your most helpful tools into a silent insider threat. Attackers do not need to “hack the model” directly. They can poison the data, knowledge, or alignment that shapes how your chatbot thinks.

The good news: once you understand the basics, the path forward is clear:

- Control and validate your data

- Protect RAG sources and internal docs

- Harden training and alignment

- Monitor outputs and listen to your users

- Treat AI security as a continuous process, not a one-time setup

Do this, and your chatbot will stay what it was meant to be: a trusted, helpful assistant for your customers and your team, not a poisoned mouthpiece for attackers.

How Keepnet Helps You Defend Against Chatbot Poisoning

Chatbot poisoning is not only a technical problem; it is also a human and process problem. People decide which data goes into training, which documents are added to RAG, and which prompts or tools the chatbot is allowed to use.

This is exactly where Keepnet’s human-centric approach to security can help.

Keepnet’s Human Risk Management Platform brings together security awareness training, behavioral analytics, and simulation tools so that everyone who interacts with your chatbot ecosystem understands the risks and follows safe practices.

Instead of focusing only on the model, you also strengthen the people and processes around it.

Security Awareness Training for AI & Data Hygiene

With Keepnet’s Security Awareness Training, you can educate employees, content owners, and technical teams on:

- What chatbot poisoning is and why it matters

- Why “just updating the wiki” or “just uploading a CSV” can introduce poisoned data

- How to spot suspicious content, unsafe prompts, and unusual chatbot behavior

- Which data must never be used for training, RAG, or feedback loops

Interactive training and micro-learning modules help non-technical staff understand that AI systems are part of the attack surface.

They learn simple, practical rules: verify sources before adding them to knowledge bases, follow change-management processes, and report strange chatbot answers immediately. Over time, this builds a culture where AI security is everyone’s job, not just the data science team’s responsibility.

Using Phishing Simulations to Prepare for Real-World Social Engineering

Attackers will not only try to poison your chatbot directly. They will also use social engineering to trick employees into changing prompts, uploading “urgent” documents, or relaxing security rules “just this once.”

Organizations use Keepnet’s phishing and social engineering simulation capabilities to safely test how employees respond to these kinds of tactics in 2025. By running realistic campaigns that mimic current online threats, such as fake “AI configuration requests,” malicious document upload invitations, or urgent policy-change emails, you can see:

- Who is likely to accept “new training data” without validation

- Who follows the documented process before changing chatbot settings

- Where extra guidance, approvals, or technical controls are needed

Turning Awareness into Continuous AI Security

When you combine governance, technical controls, and human-focused defenses, chatbot poisoning becomes much harder for attackers to pull off. Keepnet supports this continuous approach by helping you:

- Train people on safe AI and data practices

- Test behavior with social engineering simulations that mirror modern online threats

- Measure progress and identify high-risk areas over time

In short, Keepnet helps you make sure that your chatbot is not only technically hardened, but also surrounded by informed, cautious people and well-designed processes. This way, your AI remains a trusted assistant, not a silent entry point for poisoning and other social engineering attacks.