AI Malware: What It Is, Real Examples, Detection Signals, and Prevention

AI malware is malware enhanced by AI—used to automate decisions, personalize attacks, and change behavior to evade defenses. This guide explains what qualifies as AI malware, real examples from research, detection signals, and prevention steps security teams can apply now.

“AI malware” is no longer just a headline phrase. The practical shift is this: attackers can use AI to reduce effort, increase variation, and speed up decision-making, making campaigns harder to block with simple rules.

At the same time, a lot of content online exaggerates what’s happening. This guide sticks to a clear definition, a simple taxonomy, grounded examples, and a prevention checklist, without hype.

What Is AI Malware?

AI malware is malicious software that uses AI systems (including large language models) to automate, accelerate, or adapt parts of the malware lifecycle—such as reconnaissance, choosing next actions, generating scripts, changing behavior to evade detection, or improving social engineering at scale.

A useful rule:

If AI changes “how the malware decides or evolves” (not just how it’s marketed), it belongs in the AI malware category.

AI Malware vs “Normal” Malware

Traditional malware already automates tasks (persistence, theft, encryption). AI changes the economics by adding:

- Faster iteration (more variants, less manual coding)

- More convincing human-layer entry (natural language, multilingual lures)

- Adaptive behavior (environment-aware decisions)

Europol has warned that AI is accelerating cybercrime by making operations cheaper, faster, and harder to detect—especially through automation and improved deception.

Check our blog to learn more about malware and its most common forms.

A Simple Taxonomy: 3 Levels of AI Malware

Level 1 — AI-Assisted Malware (Most common today)

AI is used around the malware:

- generating phishing text, chat scripts, impersonation messages

- translating and personalizing lures at scale

- summarizing stolen data for extortion leverage

This level is consistent with law-enforcement reporting on AI-enabled scams and impersonation capabilities.

Level 2 — AI-Augmented Malware Operations

AI helps with operational decisions:

- selecting high-value targets or machines

- choosing next steps based on system context

- adapting messaging in real time during social engineering

Level 3 — AI-Embedded / AI-Orchestrated Malware (Emerging, proven in research)

AI is used inside the malicious workflow to generate or adapt components dynamically.

A notable example is ESET’s reporting on “PromptLock,” described as the first known AI-powered ransomware proof-of-concept, in which AI-generated scripts drive parts of its behavior (discovery, exfiltration, and the encryption workflow).

(Malware does not have to be ransomware to count as AI malware; PromptLock simply shows the direction things are moving.)

Real Examples of “AI Malware” (What’s Documented)

Example 1: PromptLock (ESET) — AI-powered malware proof-of-concept

ESET documented PromptLock as a proof-of-concept that uses AI to generate malicious scripts and lower the barrier to sophisticated attacks.

Defender takeaway: expect more tooling where behavior changes fast, so static indicators lose value sooner.

Example 2: LLM-guided post-breach automation (research systems)

Research like AUTOATTACKER explores using LLM-guided systems to implement post-breach, hands-on-keyboard style actions across techniques—highlighting how AI could help automate steps attackers historically did manually.

Defender takeaway: detection should emphasize behavioral correlations (identity + endpoint + network), not single-event alerts.
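To make this takeaway concrete, here is a minimal Python sketch of cross-source correlation. It assumes alerts from identity, endpoint, and network tools have already been normalized to a shared entity name (a user or host); the `Alert` type, the `correlate` function, the 30-minute window, and the two-source threshold are hypothetical choices for illustration, not any product’s API.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Alert:
    entity: str      # user or host the alert is attributed to (assumed already normalized)
    source: str      # telemetry source: "identity", "endpoint", or "network"
    time: datetime
    name: str


def correlate(alerts, window=timedelta(minutes=30), min_sources=2):
    """Return {entity: sorted sources} for entities that raised alerts from at
    least `min_sources` distinct telemetry sources inside one sliding `window`.
    The window and threshold are hypothetical defaults."""
    by_entity = defaultdict(list)
    for alert in alerts:
        by_entity[alert.entity].append(alert)

    flagged = {}
    for entity, items in by_entity.items():
        items.sort(key=lambda a: a.time)
        start = 0
        for end in range(len(items)):
            # Drop alerts that fall outside the window ending at items[end].
            while items[end].time - items[start].time > window:
                start += 1
            sources = {a.source for a in items[start:end + 1]}
            # Keep the widest combination of sources seen for this entity.
            if len(sources) >= min_sources and len(sources) > len(flagged.get(entity, [])):
                flagged[entity] = sorted(sources)
    return flagged


t0 = datetime(2025, 1, 1, 9, 0)
alerts = [
    Alert("alice", "identity", t0, "new MFA method registered"),
    Alert("alice", "endpoint", t0 + timedelta(minutes=5), "burst of discovery commands"),
    Alert("alice", "network", t0 + timedelta(minutes=12), "first-seen outbound domain"),
    Alert("bob", "endpoint", t0, "unsigned script executed"),
    Alert("bob", "network", t0 + timedelta(hours=3), "first-seen outbound domain"),
]
print(correlate(alerts))  # {'alice': ['endpoint', 'identity', 'network']}
```

Each of Alice’s alerts is unremarkable alone; the same account tripping identity, endpoint, and network detections within half an hour is what gets escalated. Bob’s two alerts are three hours apart, so they stay below the bar. The window and threshold would need tuning against your own alert volume.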

Example 3: LLM-based malware code generation (academic literature)

Recent academic work examines, from an attacker’s perspective, whether LLMs can generate malware source code (as a research exercise) and indicates that it is feasible.

Defender takeaway: expect more malware “variants” and faster mutation, which increases pressure on detection systems.

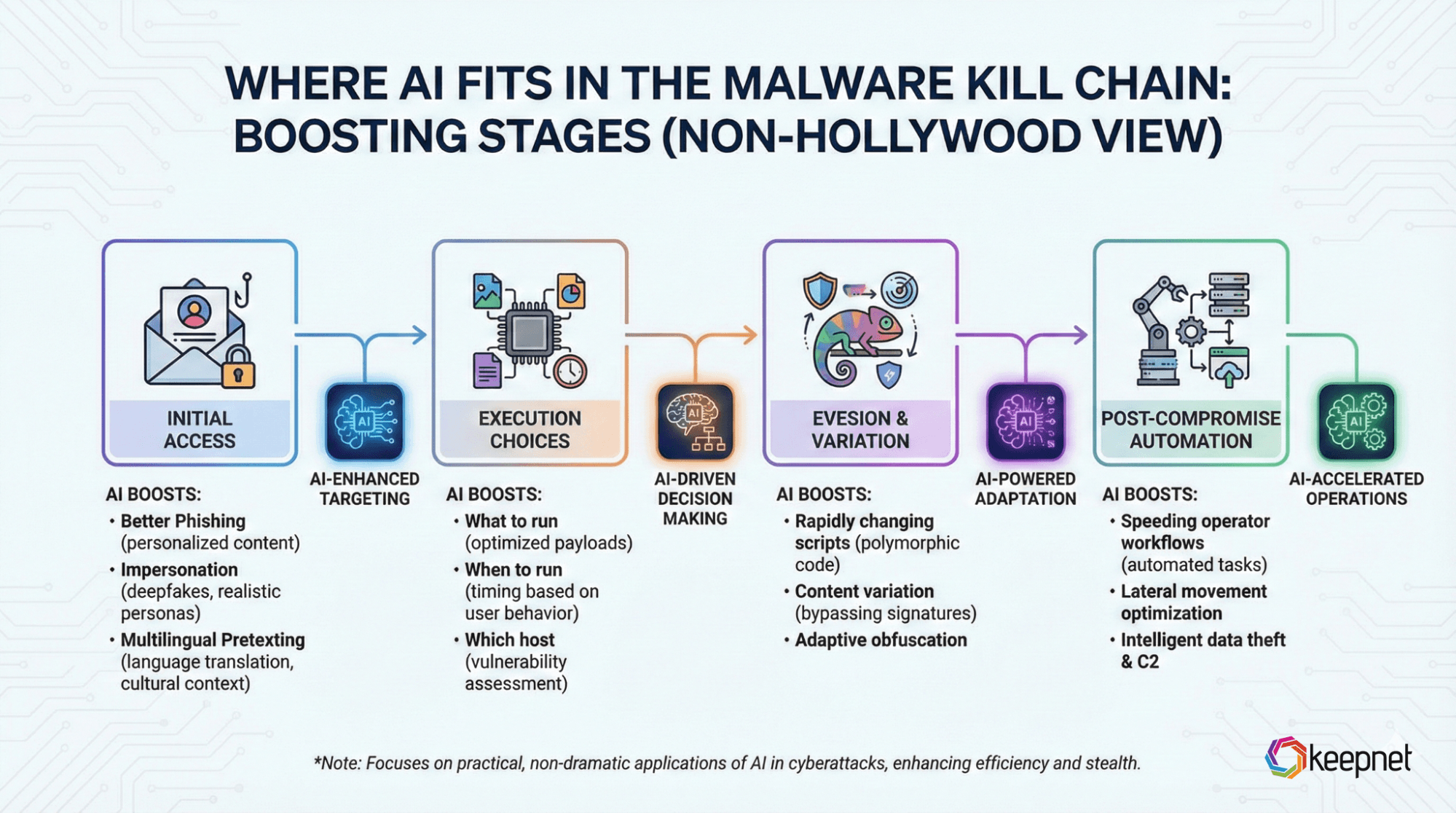

Where AI Fits in the Malware Kill Chain

AI most often boosts these stages:

- Initial access (better phishing, impersonation, multilingual pretexting)

- Execution choices (what to run, when, and on which host)

- Evasion & variation (rapidly changing scripts/content)

- Post-compromise automation (speeding operator workflows)

Detection: The Signals SOC Teams Should Watch

AI malware pushes defenders toward behavior-first detection. Useful signals include:

1) Identity anomalies (often the first “real” indicator)

- abnormal sign-ins, token/session anomalies, new MFA methods

- impossible travel, unusual devices, unusual OAuth consent
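Impossible travel is one of the simpler identity checks to reason about: if two consecutive sign-ins for the same account are farther apart than any plausible journey allows, something is off. A minimal sketch, assuming each sign-in has already been geolocated to latitude/longitude (IP geolocation is approximate); `impossible_travel`, the 900 km/h speed cap, and the 500 km noise floor are hypothetical parameters, not values from any identity provider.

```python
import math
from datetime import datetime

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def impossible_travel(prev, curr, max_kmh=900.0, min_km=500.0):
    """prev/curr are (timestamp, lat, lon) for consecutive sign-ins by one user.
    max_kmh (roughly airliner speed) and min_km are hypothetical thresholds."""
    (t1, lat1, lon1), (t2, lat2, lon2) = prev, curr
    distance = haversine_km(lat1, lon1, lat2, lon2)
    if distance < min_km:
        return False  # ignore small jumps caused by IP-geolocation noise
    hours = abs((t2 - t1).total_seconds()) / 3600
    if hours == 0:
        return True   # far apart at the same instant
    return distance / hours > max_kmh


london = (datetime(2025, 3, 1, 9, 0), 51.5074, -0.1278)
sydney = (datetime(2025, 3, 1, 10, 0), -33.8688, 151.2093)
paris = (datetime(2025, 3, 1, 15, 0), 48.8566, 2.3522)

print(impossible_travel(london, sydney))  # True: ~17,000 km in one hour
print(impossible_travel(london, paris))   # False: ~340 km, under min_km
```

VPNs and corporate egress points can produce the same pattern legitimately, which is why this signal is more useful combined with device and session context than on its own.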

2) Endpoint behavior that looks like automation

- rapid discovery commands, repeated system inventory patterns

- sudden credential access attempts, browser data reads

- unusual script execution patterns across multiple endpoints
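One way to spot “automation-shaped” endpoint behavior is to count how many distinct discovery commands a host runs in a short burst. A minimal sketch, assuming process-creation events arrive as `(host, timestamp, command_line)` tuples (for example from EDR or Sysmon logs). The `DISCOVERY_COMMANDS` watchlist, the 60-second window, and the threshold of five distinct commands are hypothetical starting points, and the naive whitespace split does not handle quoted paths containing spaces.

```python
from datetime import datetime, timedelta

# Hypothetical watchlist of common Windows discovery binaries; tune for your estate.
DISCOVERY_COMMANDS = {"whoami", "hostname", "ipconfig", "systeminfo", "net",
                      "nltest", "tasklist", "netstat", "arp", "quser"}


def discovery_bursts(events, window=timedelta(seconds=60), threshold=5):
    """Return hosts where at least `threshold` distinct discovery commands ran
    within `window`, a pattern more typical of scripts than of a human typing."""
    per_host = {}
    for host, ts, cmdline in events:
        parts = cmdline.strip().split()
        if not parts:
            continue
        # Normalize "C:\Windows\System32\whoami.exe /all" to "whoami".
        exe = parts[0].replace("\\", "/").rsplit("/", 1)[-1].lower()
        if exe.endswith(".exe"):
            exe = exe[:-4]
        if exe in DISCOVERY_COMMANDS:
            per_host.setdefault(host, []).append((ts, exe))

    flagged = set()
    for host, hits in per_host.items():
        hits.sort()
        start = 0
        for end in range(len(hits)):
            # Slide the window so it ends at hits[end].
            while hits[end][0] - hits[start][0] > window:
                start += 1
            if len({exe for _, exe in hits[start:end + 1]}) >= threshold:
                flagged.add(host)
                break
    return flagged


t0 = datetime(2025, 1, 1, 14, 0, 0)
events = [
    ("ws-042", t0 + timedelta(seconds=i), cmd)
    for i, cmd in enumerate(["whoami /all", "hostname", "ipconfig /all", "systeminfo",
                             "net user", r"C:\Windows\System32\nltest.exe /domain_trusts"])
] + [
    ("ws-007", t0, "whoami"),
    ("ws-007", t0 + timedelta(minutes=40), "ipconfig"),
]
print(discovery_bursts(events))  # {'ws-042'}
```

Administrators run these commands too; what stands out is six different ones in about five seconds on `ws-042`, versus two spread over forty minutes on `ws-007`.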

3) Network signals: “new + weird + repetitive”

- rare outbound destinations (especially shortly after initial access)

- beacon-like traffic patterns

- unexpected data staging/exfil paths
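Two of these network signals lend themselves to simple scoring. A minimal sketch, assuming you already have per-host, per-destination connection timestamps (in seconds) and a baseline set of destinations seen historically; `beacon_score`, `looks_like_beacon`, `first_seen`, and the 0.1 cutoff are hypothetical. The beacon score is the coefficient of variation (standard deviation divided by mean) of the gaps between connections, so values near zero indicate timer-driven traffic.

```python
import statistics


def beacon_score(timestamps):
    """Coefficient of variation of the gaps between sorted connection times
    (seconds) from one host to one destination. Near 0 = very regular."""
    if len(timestamps) < 4:
        return None  # too few connections to say anything useful
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    mean_gap = statistics.mean(gaps)
    if mean_gap == 0:
        return None
    return statistics.pstdev(gaps) / mean_gap


def looks_like_beacon(timestamps, max_cv=0.1):
    # max_cv is a hypothetical cutoff; tune it against your own traffic.
    score = beacon_score(timestamps)
    return score is not None and score <= max_cv


def first_seen(destinations, baseline):
    """Destinations absent from a historical baseline (e.g. the last 30 days)."""
    return sorted(set(destinations) - set(baseline))


regular = [0, 60, 120, 181, 240, 300]      # roughly every 60 s
browsing = [0, 5, 300, 310, 1800, 1805]    # bursty, human-driven
print(looks_like_beacon(regular))   # True
print(looks_like_beacon(browsing))  # False
print(first_seen(["intranet.example", "cdn.example", "rare-host.example"],
                 {"intranet.example", "cdn.example"}))  # ['rare-host.example']
```

Malware that adds random jitter to its sleep interval will push the score up, so this is one signal to correlate with others rather than a verdict on its own.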

4) Content volatility

If your detections rely heavily on static strings or known hashes, AI-driven variation makes bypasses more likely. Keep signatures in your controls; just don’t depend on them.
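The weakness of hash-only matching is easy to demonstrate. This sketch uses harmless placeholder byte strings (not real malware) and a one-entry hash blocklist: every trivial rewrite produces a completely different SHA-256 digest, so only the exact sample already on the list is caught.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


# Harmless placeholder "script" plus trivially different rewrites of it: the kind
# of surface variation a generator can produce on every run.
variants = [
    b"echo collecting inventory\n",
    b"echo  collecting inventory\n",   # extra space
    b"ECHO collecting inventory\n",    # different case
    b"echo collecting inventory\r\n",  # Windows line ending
]

blocklist = {sha256_hex(variants[0])}  # only the first sample was ever seen

for v in variants:
    digest = sha256_hex(v)
    print(digest[:12], "blocked" if digest in blocklist else "missed")
```

Only the first line reports `blocked`. Behavior-based detections look at what a script does rather than its exact bytes, so cosmetic rewrites like these do not defeat them as easily.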

Prevention: The AI Malware Defense Stack (Practical Checklist)

For organizations

- Harden identity: phishing-resistant MFA for privileged access; protect MFA enrollment

- Reduce blast radius: least privilege, remove standing admin, segment critical assets

- Constrain execution: application control, script restrictions, macro controls, sandboxing

- Modern EDR + telemetry correlation: endpoint + identity + email/web signals in one view

- Backup + restore discipline: immutable backups and regular restore tests

- Playbooks + tabletop exercises: include “AI-accelerated” scenarios (faster pivoting, higher variation)
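Restore tests are easier to keep honest when they are scripted. A minimal sketch, assuming you record a SHA-256 manifest of the protected files at backup time and restore into a scratch directory; `manifest` and `verify_restore` are hypothetical helpers, not part of any backup product, and `shutil.copytree` merely stands in for a real restore job.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def manifest(root: Path) -> dict:
    """Map each file path (relative to root, POSIX style) to its SHA-256 digest."""
    result = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            result[path.relative_to(root).as_posix()] = digest
    return result


def verify_restore(expected: dict, restored_root: Path) -> dict:
    """Compare a manifest recorded at backup time with what was actually restored."""
    restored = manifest(restored_root)
    return {
        "missing": sorted(set(expected) - set(restored)),
        "changed": sorted(p for p in expected.keys() & restored.keys()
                          if expected[p] != restored[p]),
        "unexpected": sorted(set(restored) - set(expected)),
    }


# Demo in a throwaway directory; copytree stands in for a real restore job.
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp, "src")
    (src / "db").mkdir(parents=True)
    (src / "db" / "orders.csv").write_text("id,total\n1,9.99\n")
    (src / "config.ini").write_text("[app]\nmode=prod\n")
    baseline = manifest(src)  # recorded when the backup is taken

    restored_dir = Path(tmp, "restored")
    shutil.copytree(src, restored_dir)
    (restored_dir / "config.ini").write_text("[app]\nmode=tampered\n")  # simulate corruption
    print(verify_restore(baseline, restored_dir))
    # {'missing': [], 'changed': ['config.ini'], 'unexpected': []}
```

A byte-level match is only the first check; a full restore test would also confirm that applications can actually open and use the restored data.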

For employees (keep it simple)

- Don’t trust urgency. Verify sensitive requests out-of-band.

- Don’t install “updates,” “viewers,” or “security tools” from messages.

- Report suspicious messages immediately—early reporting is a kill-switch.

Conclusion

AI malware doesn’t have to be “fully autonomous” to be dangerous. If AI makes attacks faster, more personalized, and more variable, your advantage comes from layered controls, measurable human-risk reduction, and recovery readiness.

If you’re building a measurable human-risk program, Keepnet’s Security Awareness Training software and Phishing Simulator help teams train against the modern, AI-amplified social engineering paths that often precede malware incidents. Gartner has named Keepnet a go-to vendor for stopping deepfake and AI disinformation attacks in consecutive years.