What Is Deepfake Phishing Simulation? A Complete Guide to Protecting Against AI-Driven Attacks

Deepfake phishing simulation is a security awareness exercise that uses AI-generated voice, video, and avatars to impersonate real people in realistic (but safe) phishing scenarios. It helps organizations train employees to verify urgent requests and stop AI-driven social engineering before it causes fraud or data loss.

Imagine receiving a video call from your CEO, urgently requesting a fund transfer. The voice, face, and mannerisms appear genuine. You comply—only to discover it was a deepfake.

The financial impact is significant: in Q1 2025 alone, Resemble AI's report documents financial damages exceeding $200 million from major deepfake incidents worldwide, underlining the critical need for robust training and awareness programs. (Source: Resemble AI)

The FBI/IC3 has also warned about ongoing malicious text and voice messaging campaigns that impersonate trusted figures—reinforcing why out-of-band verification and link/credential caution must be mandatory. (Source: IC3)

As generative AI becomes more accessible, cybercriminals increasingly use deepfakes to create convincing scams, and email-only phishing simulations no longer cover the full threat. Deepfake phishing simulation closes this gap by training organizations to recognize and respond to AI-driven voice and video attacks.

In this blog post, we’ll explore how deepfake phishing simulations work, why they are essential, and how organizations can implement them effectively.


Deepfake Phishing Simulation Explained

Deepfake phishing simulation is a security training method that uses AI-generated voice, video, and image manipulation to mimic real-world phishing attacks. Instead of only sending fake emails, these simulations replicate how attackers clone an executive’s voice on a phone call or face in a video meeting to trick employees into taking harmful actions—like wiring money or sharing sensitive data.

By running deepfake phishing simulations in a safe, controlled environment, organizations can:

- Teach employees how to spot deepfake cues (unnatural speech patterns, video glitches, suspicious requests).

- Test whether internal processes (like financial approval workflows) can withstand AI-powered fraud.

- Provide instant feedback and micro-training to strengthen defenses after each simulated attack.

Keepnet resources to explore next:

- Deepfake Statistics & Trends 2026 (data + trends)

- What is Deepfake Phishing? (plain definition + examples)

- Deepfakes: How to Spot Them (practical red flags)

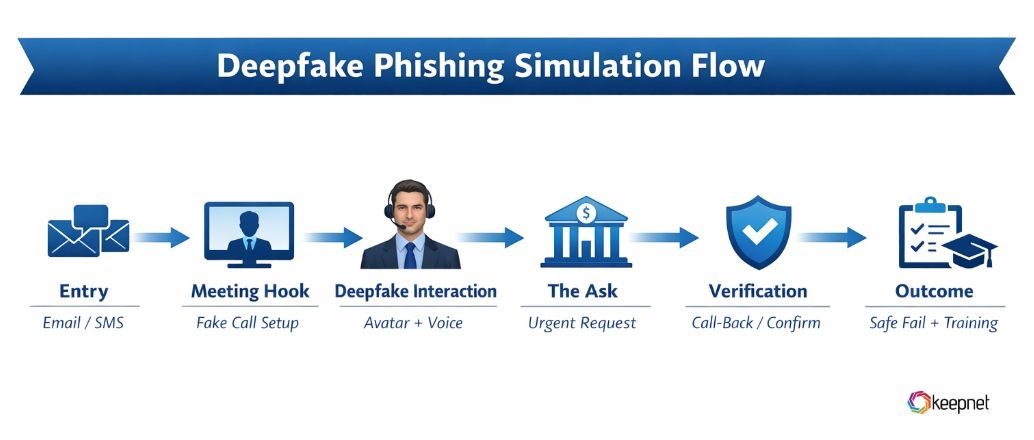

How Deepfake Phishing Simulation Works (Step by Step)

Deepfake phishing simulations are designed to mirror the exact tactics cybercriminals use when exploiting AI-generated voices, videos, or images. By breaking the process into clear stages, organizations can understand how these attacks unfold, safely test employee responses, and strengthen defenses before a real incident occurs.

Intelligence & Persona Selection

Your deepfake test team selects a target persona (C-suite executive, finance lead, HR head, etc.) and gathers open-source intelligence (OSINT), such as public data, social media signals, and communication patterns, to craft a believable context.

Content Generation

Your team uses voice cloning or video avatar tools to create a simulated request (e.g., “We need you to approve a wire,” “Sign this document now,” etc.). In some cases, the script is delivered inside a simulated video meeting environment (Zoom/Teams-style).

Because many deepfake scams start as voice-first impersonation, we recommend reading: What is Vishing (Definition, Detection & Protection).

Attack Delivery & Engagement Channels

The deepfake simulation may begin with email and then escalate to a video call, SMS, or voice call. The goal is to mirror real attacker escalation paths—safely.

To understand how attackers escalate across channels, read our guide: Vishing vs. Phishing vs. Smishing. And for QR-based escalation tricks, see: What is Quishing (QR Phishing)?
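To make the escalation idea concrete, here is a minimal Python sketch that models a scenario as an ordered list of stages. The `Stage` class, the channel names, and the rule that escalation stops at the stage where the target reports the attempt are illustrative assumptions. This is not any platform's scenario format.

```python
from __future__ import annotations

from dataclasses import dataclass


# Hypothetical stage model: channel names and fields are illustrative
# assumptions, not a vendor's scenario format.
@dataclass(frozen=True)
class Stage:
    channel: str  # e.g. "email", "sms", "voice", "video"
    pretext: str  # the simulated request delivered on this channel


def stages_delivered(path: list[Stage], reported_at: int | None) -> list[Stage]:
    """Return the stages a target actually receives.

    Escalation continues stage by stage until the target reports the attempt.
    The stage where they report is the last one delivered. If they never
    report, every stage is delivered.
    """
    if reported_at is None:
        return list(path)
    if not 0 <= reported_at < len(path):
        raise ValueError("reported_at must index a stage in the path")
    return list(path[: reported_at + 1])


# Email lure -> SMS nudge -> deepfake video call.
path = [
    Stage("email", "Calendar invite for an urgent call with the CEO"),
    Stage("sms", "Please join the video call now, it's time-sensitive"),
    Stage("video", "Deepfake CEO asks you to approve a wire transfer"),
]
print([s.channel for s in stages_delivered(path, reported_at=1)])  # ['email', 'sms']
```

Here, reporting at the SMS stage means the target never sees the video call. In the analytics, that attempt would count as a pass.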

Safe Fail + Micro-training

If an employee clicks, speaks, or enters data, the simulation ends safely (no real harm). Immediately, a micro-training message appears to highlight the warning signs and deliver a short learning moment.
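The safe-fail step can be sketched as a small event handler. This is a hypothetical example: the action names, channel keys, and training text are assumptions. A real platform would connect this logic to its own event stream.

```python
# Hypothetical event handler for the "safe fail" step. Action names, channel
# names, and message text are illustrative assumptions.
RISKY_ACTIONS = {"clicked_link", "spoke_to_caller", "submitted_data", "approved_request"}

MICRO_TRAINING = {
    "voice": "Voices can be cloned. Hang up and call back on a number from the company directory.",
    "video": "Video can be faked. Verify urgent requests through a separate, trusted channel.",
    "email": "Check the sender and never enter credentials from an unexpected link.",
}
DEFAULT_TIP = "Pause and verify unexpected, urgent requests out of band."


def handle_event(channel: str, action: str) -> dict:
    """Classify a target's action and decide what the simulation does next.

    Only the action type is passed in; any data the target typed is never
    handed to this function, so nothing sensitive is stored.
    """
    if action == "reported":
        return {"outcome": "pass", "end_simulation": True,
                "message": "Well spotted: this was a simulated deepfake attack."}
    if action in RISKY_ACTIONS:
        # End the scenario immediately and show the just-in-time lesson.
        return {"outcome": "fail", "end_simulation": True,
                "message": MICRO_TRAINING.get(channel, DEFAULT_TIP)}
    # Opened, ignored, etc.: keep the scenario running.
    return {"outcome": "pending", "end_simulation": False, "message": None}


print(handle_event("voice", "spoke_to_caller")["message"])
```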

Reporting & Analytics

Your deepfake phishing simulation platform tracks results such as pass/fail rates, the most convincing personas and channels, process failure points, remediation success, and trends over time.
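As a rough illustration of the analytics step, the sketch below calculates failure rates per persona or channel from a hypothetical results export, with one dictionary per simulated attempt. The field names `persona`, `channel`, and `outcome` are assumptions.

```python
from __future__ import annotations

from collections import defaultdict


def failure_rates(results: list[dict], key: str) -> dict[str, float]:
    """Share of simulations that ended in "fail", grouped by `key`.

    `results` is a hypothetical export with one dict per simulation, e.g.
    {"persona": "CEO", "channel": "voice", "outcome": "fail"}.
    """
    totals: dict[str, int] = defaultdict(int)
    fails: dict[str, int] = defaultdict(int)
    for r in results:
        totals[r[key]] += 1
        if r["outcome"] == "fail":
            fails[r[key]] += 1
    return {k: fails[k] / totals[k] for k in totals}


results = [
    {"persona": "CEO", "channel": "voice", "outcome": "fail"},
    {"persona": "CEO", "channel": "video", "outcome": "pass"},
    {"persona": "HR director", "channel": "email", "outcome": "pass"},
    {"persona": "CEO", "channel": "voice", "outcome": "fail"},
]
by_channel = failure_rates(results, "channel")
print(by_channel)                           # {'voice': 1.0, 'video': 0.0, 'email': 0.0}
print(max(by_channel, key=by_channel.get))  # voice (most convincing channel here)
```

Running the same function on each campaign's export gives a simple trend view. A falling failure rate for a channel suggests the training for that channel is working.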

Why Organizations Need Deepfake Phishing Tests

As deepfake attacks become more convincing, traditional security training falls short. Deepfake phishing simulations help organizations prepare by exposing employees to realistic scenarios, teaching them to spot fake audio and video, and reinforcing critical thinking when handling unexpected requests.

The sophistication of deepfake phishing attacks makes them particularly dangerous. Modern AI tools can closely mimic voices, facial expressions, and gestures, which makes fakes very hard to tell apart from the real person without proper training. This demands a proactive approach to cybersecurity.

By practicing in a controlled environment, employees become better equipped to recognize and respond to deepfake threats, reducing the risk of costly security breaches. The stakes are high: Resemble AI documented more than $200 million in deepfake-enabled fraud losses worldwide in Q1 2025 alone. (Source: Resemble AI)

Check out our blog post on deepfake phishing statistics and trends.

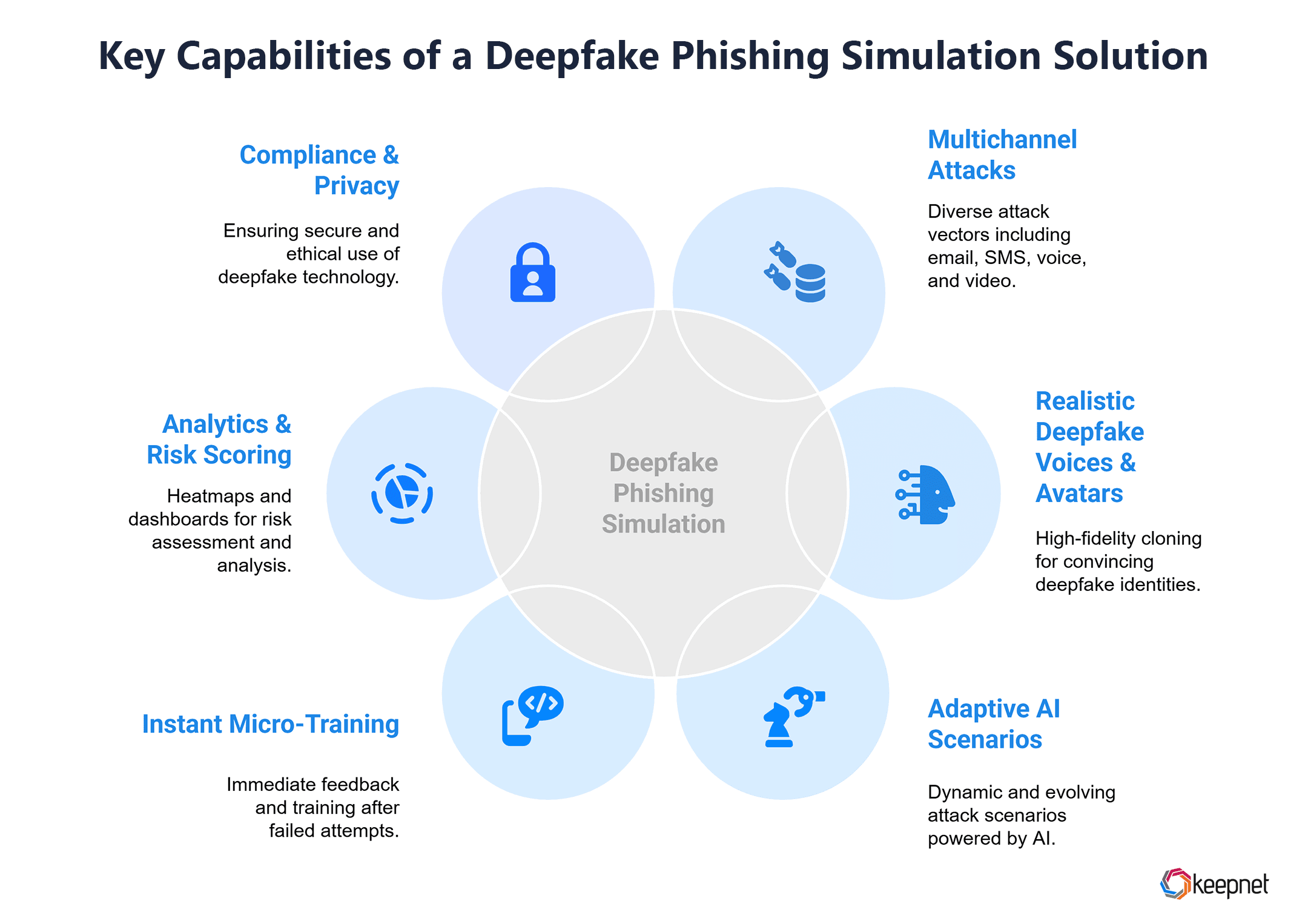

Key Capabilities You Should Expect from a Deepfake Phishing Simulation Tool

As deepfake attacks grow more sophisticated, organizations need simulation tools that go beyond email alone. The following key capabilities ensure your deepfake phishing simulations are realistic, effective, and build true resilience against AI-driven threats.

| Capability | Description |
|---|---|
| Multichannel Attacks | Email, SMS, voice, video |
| Realistic Deepfake Voices & Avatars | High-fidelity cloning |
| Adaptive AI Scenarios | Dynamic, evolving attacks |
| Instant Micro-Training | Feedback right after failure |
| Analytics & Risk Scoring | Heatmaps, dashboards |
| Compliance & Privacy | Secure and ethical use |

Table 1: Key Capabilities You Should Expect from a Deepfake Phishing Simulation Solution

Benefits of Deepfake Phishing Simulations

Deepfake phishing simulations provide organizations with practical ways to strengthen cybersecurity. Here’s what your organization can gain:

- Increased Employee Awareness: Train staff to recognize deepfake videos, audio, and messages, making them less likely to fall for scams.

- Improved Response Skills: Equip employees to handle suspicious requests with caution and critical thinking.

- Reduced Financial Risk: Minimize the chances of falling victim to costly deepfake scams and data breaches.

- Regulatory Compliance: Meet industry standards for cybersecurity training with advanced simulation techniques.

- Reputation Protection: Proactively addressing threats helps maintain your organization’s integrity and customer trust.

By regularly running deepfake phishing simulations, organizations can build strong cybersecurity habits, fostering a vigilant and security-aware culture.

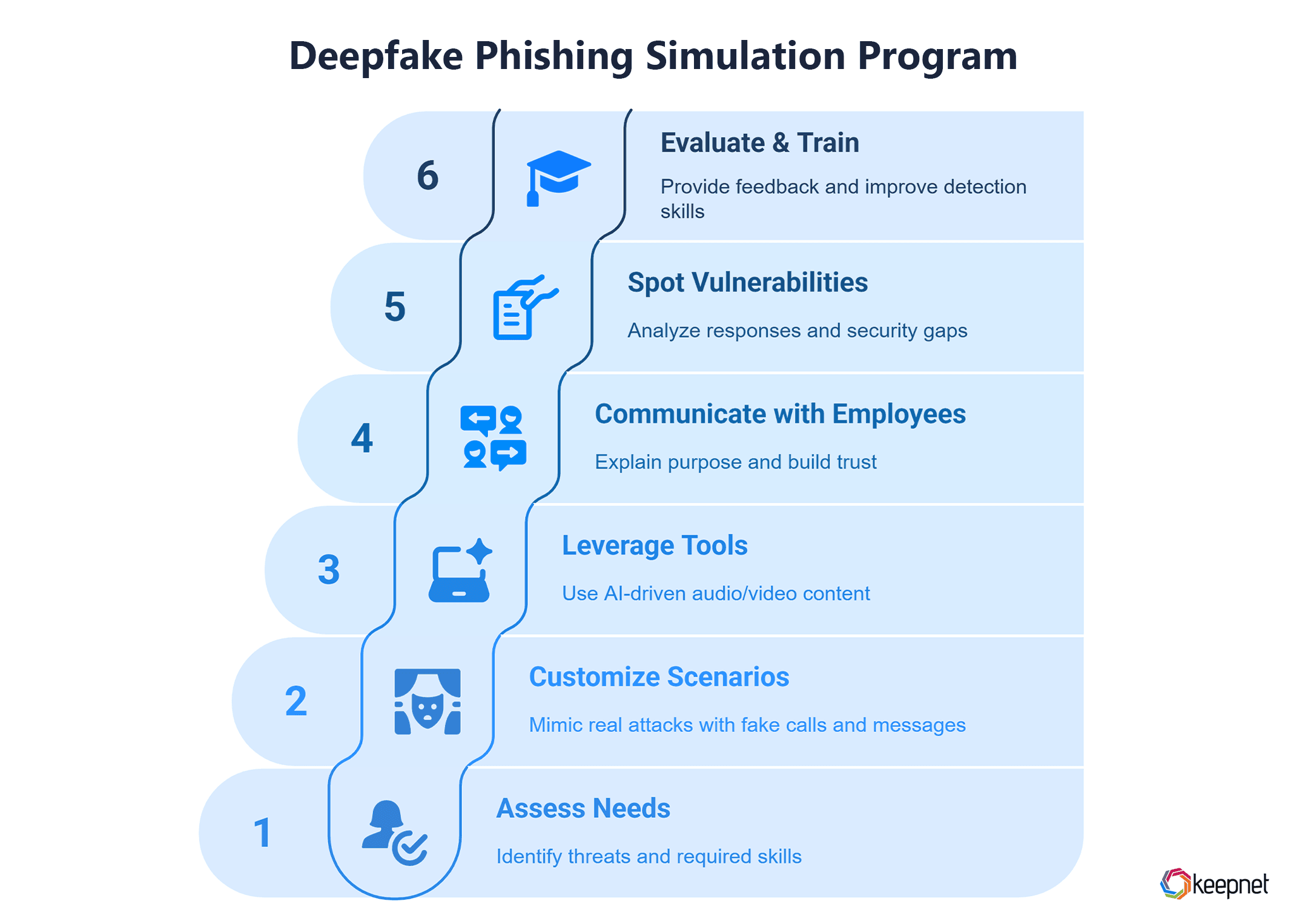

How to Set Up an Effective Deepfake Phishing Simulation Program

Implementing a deepfake phishing simulation requires careful planning to make it realistic, ethical, and effective. Here’s how to do it:

- Assess Your Needs: Identify potential threats specific to your industry and determine which skills employees need to develop.

- Create Realistic and Customized Scenarios: Design simulations that closely mimic real-life deepfake attacks, such as fake video calls or voice messages from executives. Customize these scenarios to reflect your organization's structure and communication patterns for maximum relevance.

- Leverage Advanced Tools: Use AI-driven platforms to produce convincing audio and video content, making the simulations more credible.

- Communicate with Employees: Clearly explain the purpose of the simulation to reduce stress and build trust.

- Spot Vulnerabilities: Use the simulation results to identify weak points in employee responses and security protocols.

- Evaluate and Train: After the simulation, analyze outcomes, provide feedback, and offer training to improve detection skills.

For more insights into creating effective phishing simulations using scientific frameworks and behavioral tactics, read the Keepnet article: The Science Behind Phishing Simulations: How Scientific Frameworks and Behavioral Tactics Train Your Team.

How to Choose the Right Deepfake Phishing Test Vendor

Selecting a deepfake phishing simulation vendor is one of the most critical steps in building resilience against AI-powered social engineering attacks. The right partner should provide realistic deepfake voice and video simulations, advanced reporting, and secure handling of sensitive data.

Deepfake Phishing Vendor Evaluation Checklist:

- Voice + Video Deepfake Support – Ensure the platform can generate both cloned voices and realistic video avatars.

- Secure Persona Integration – Can the solution safely use your company’s personas without risking privacy or data exposure?

- Multichannel Phishing Coverage – Look for simulations that span email, SMS, voice, and video.

- Adaptive AI Logic – Does the system evolve dynamically to replicate real attacker behavior?

- Analytics & Reporting Dashboards – Comprehensive metrics, risk scoring, and performance tracking are must-haves.

- Compliance & Privacy Safeguards – Consent management, opt-outs, and strong data governance should be built in.

- Case Studies & References – Proven results with organizations similar to yours strengthen credibility.

- Pricing Transparency – Understand if costs are per user, per campaign, or subscription-based.
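A weighted scorecard is one way to apply the checklist consistently across vendors. The sketch below is a minimal example. The criterion keys mirror the checklist, but the weights and the 0 to 5 rating scale are assumptions that you should adjust to your own priorities.

```python
from __future__ import annotations

# Illustrative weights (1 = nice to have, 3 = critical). These are
# assumptions to adapt, not an industry standard.
WEIGHTS = {
    "voice_video_support": 3,
    "secure_persona_integration": 3,
    "multichannel_coverage": 2,
    "adaptive_ai_logic": 1,
    "analytics_reporting": 2,
    "compliance_privacy": 3,
    "case_studies_references": 1,
    "pricing_transparency": 1,
}


def vendor_score(ratings: dict[str, int]) -> float:
    """Weighted score from 0 to 100, given a 0-5 rating per checklist item."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"unrated criteria: {sorted(missing)}")
    for name, value in ratings.items():
        if not 0 <= value <= 5:
            raise ValueError(f"{name} must be rated 0-5, got {value}")
    earned = sum(weight * ratings[name] for name, weight in WEIGHTS.items())
    possible = 5 * sum(WEIGHTS.values())
    return round(100 * earned / possible, 1)


vendor_a = {name: 4 for name in WEIGHTS}
vendor_a["compliance_privacy"] = 2  # e.g. weak consent management
print(vendor_score(vendor_a))  # 72.5
```

Because privacy carries a high weight in this sketch, a weak rating there pulls a vendor's score down more than a weak rating on a nice-to-have item would.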

Implementation Roadmap — Launching Your Deepfake Phishing Program

Rolling out a deepfake phishing simulation program requires structure and gradual adoption. A phased approach ensures employees adapt while processes are stress-tested.

Step-by-Step Roadmap:

- Pilot Program – Start with a small user group to validate realism and address usability or compliance concerns.

- Phased Rollout – Expand across departments, focusing first on high-risk roles (finance, HR, IT help desk).

- Feedback & Refinement – Collect user insights and adjust templates, voice clones, or scenarios.

- Regular Testing Cadence – Schedule simulations quarterly or twice a year to keep vigilance high.

- Iterative Learning – Use lessons learned to scale scenario complexity and improve built-in micro-training.
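You can plan the roadmap's timing with a few lines of Python. This sketch assumes a one-month gap between rollout phases. It treats `every_months=3` as quarterly and `every_months=6` as twice a year. The phase names and groups are hypothetical.

```python
from __future__ import annotations

import calendar
from datetime import date


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping to the target month's last day."""
    index = d.month - 1 + months
    year, month = d.year + index // 12, index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


# Hypothetical phases: pilot first, then high-risk roles, then everyone.
PHASES = [
    ("pilot", ["security-champions"]),
    ("phase 1", ["finance", "hr", "it-help-desk"]),
    ("phase 2", ["all-staff"]),
]


def rollout_plan(start: date, gap_months: int = 1) -> list[tuple[str, list[str], date]]:
    """Launch each phase `gap_months` after the previous one (assumed gap)."""
    return [(name, groups, add_months(start, i * gap_months))
            for i, (name, groups) in enumerate(PHASES)]


def cadence_dates(first: date, every_months: int, count: int) -> list[date]:
    """Recurring test dates: every_months=3 is quarterly, 6 is twice a year."""
    return [add_months(first, i * every_months) for i in range(count)]


for name, groups, launch in rollout_plan(date(2026, 1, 31)):
    print(launch, name, groups)
# 2026-01-31 pilot ['security-champions']
# 2026-02-28 phase 1 ['finance', 'hr', 'it-help-desk']
# 2026-03-31 phase 2 ['all-staff']
```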

Sample Deepfake Scenarios & Use Cases for Deepfake Phishing Tests

Deepfake simulations work best when they mirror real-world phishing threats. Here are practical examples:

- Deepfake CEO Voice Call Scam – An executive’s cloned voice requests an urgent wire transfer.

- Video Meeting Avatar Attack – A deepfake avatar in a simulated Zoom/Teams call pressures employees to approve actions.

- SMS + Video Combo Attack – A fake SMS instructs the target to join a video call with a deepfake impersonator.

- HR / Onboarding Deepfake Attack – A “fake HR director” requests personal documents from new hires.

If you want real-world voice pretexts to adapt into scenarios, use: 10 Vishing Examples & How to Prevent Them.

Detection & Mitigation — Best Practices Against Deepfake Phishing

While deepfake phishing simulations build awareness, organizations must also strengthen defenses with layered strategies.

Best Practices:

- Out-of-Band Verification – Always confirm urgent requests through a separate, trusted communication channel.

- Behavioral & Linguistic Cues – Train employees to spot odd timing, lip sync issues, or unnatural speech patterns.

- Deepfake Detection Tools – Leverage forensic AI and detection solutions to analyze suspect media.

- User Reporting Channels – Provide a simple “Report Suspicious Activity” button for employees.

- Incident Response Playbooks – Define clear escalation paths for suspected deepfake phishing incidents.
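Out-of-band verification is easier to enforce when it is written down as a rule. The sketch below is a hypothetical policy check. It assumes a list of high-risk actions and an internally maintained directory of trusted contact numbers. The key design choice is that it never reuses contact details supplied by the request itself.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical policy inputs. Adapt action names and the directory to your
# own approval workflow.
HIGH_RISK_ACTIONS = {"wire_transfer", "change_bank_details", "share_credentials", "send_personal_data"}
TRUSTED_DIRECTORY = {"ceo@example.com": "+1-555-0100"}  # maintained internally


@dataclass
class Request:
    requester: str                          # claimed identity, e.g. an email address
    action: str
    channel: str                            # where it arrived: "video", "voice", ...
    contact_in_request: str | None = None   # number the request asks you to use


def next_step(req: Request) -> str:
    """Decide how to verify a request before acting on it."""
    if req.action not in HIGH_RISK_ACTIONS:
        return "proceed with normal controls"
    trusted = TRUSTED_DIRECTORY.get(req.requester)
    if trusted is None:
        return "escalate to security: requester not in trusted directory"
    # Never reuse contact details supplied by the request itself, because an
    # attacker controls those. Call back on the directory number only.
    return f"hold: call back on {trusted} before acting"


req = Request("ceo@example.com", "wire_transfer", "video", contact_in_request="+1-555-0199")
print(next_step(req))  # hold: call back on +1-555-0100 before acting
```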

Deepfake pressure often pairs with MFA push-spam and “approve now” tricks—see: What is MFA Fatigue Attack and How to Prevent It.

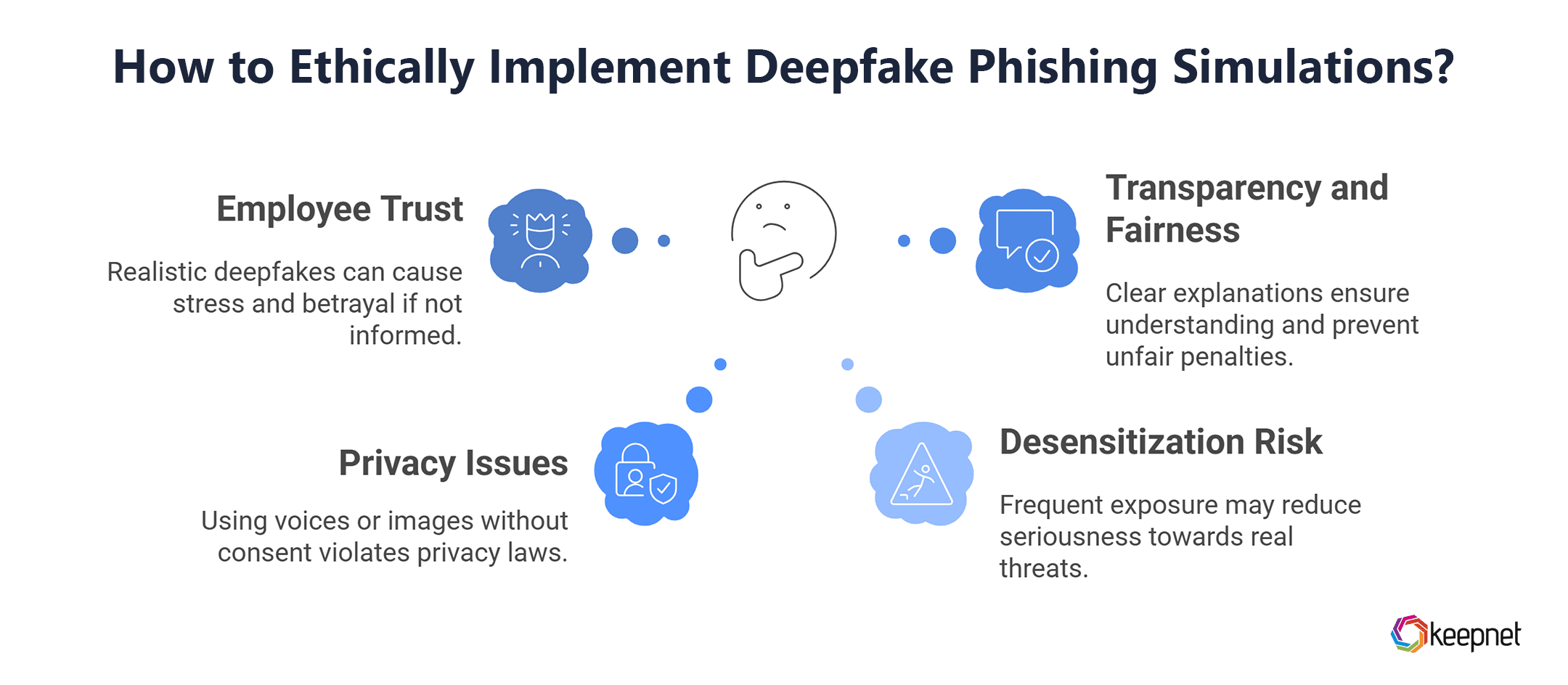

The Ethical Dilemmas of Deepfake Phishing Simulations

Deepfake phishing simulations can be highly effective, but they also raise ethical concerns that organizations must address:

- Employee Trust: Using realistic deepfakes may cause stress or feelings of betrayal if employees are not informed beforehand.

- Privacy Issues: Creating phishing simulations using employees’ voices or images without consent can violate privacy laws.

- Desensitization Risk: Frequent exposure to deepfakes might make employees less likely to take real threats seriously.

- Transparency and Fairness: Simulations should be clearly explained as part of training, ensuring that employees understand their purpose and are not unfairly penalized.

To balance training effectiveness and ethics, organizations should clearly communicate the goals of simulations, respect employee privacy, and ensure that scenarios are relevant without causing unnecessary distress.
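Consent and transparency can be enforced as a pre-launch gate. This hypothetical sketch assumes that each persona record stores two things: whether the person gave written consent to have their voice or face cloned, and an optional consent expiry date. It also assumes the training program as a whole, not each individual test, has been announced to employees.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


@dataclass
class Persona:
    name: str
    likeness_consent: bool              # written consent to clone voice/face
    consent_expires: date | None = None


def persona_blockers(persona: Persona, program_announced: bool, today: date) -> list[str]:
    """Return reasons a persona may not be used yet; an empty list means go."""
    reasons = []
    if not persona.likeness_consent:
        reasons.append(f"no recorded consent from {persona.name}")
    elif persona.consent_expires is not None and persona.consent_expires < today:
        reasons.append(f"consent from {persona.name} expired {persona.consent_expires}")
    if not program_announced:
        reasons.append("employees have not been told the training program exists")
    return reasons


cfo = Persona("CFO", likeness_consent=True, consent_expires=date(2025, 12, 31))
print(persona_blockers(cfo, program_announced=True, today=date(2026, 1, 7)))
# ['consent from CFO expired 2025-12-31']
```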

Keepnet Deepfake Phishing Simulation: Combatting AI-Driven Threats

Keepnet's deepfake phishing simulation tool helps organizations stay ahead of evolving cyber threats by creating realistic, adaptive phishing campaigns. It mirrors the latest social engineering attacks, identifies risky user behavior, and triggers instant micro-training, building a more resilient workforce with each simulation.

Key Features:

- Extensive Template Library: Access over 6,000 phishing campaign templates to simulate realistic attacks and keep training engaging.

- Multi-Channel Phishing Simulation: Use techniques such as SMS, voice, QR code, MFA, and callback phishing to cover a wide range of social engineering risks.

- Global and Local Reach: Deliver phishing attack simulations across time zones in a single campaign, with support for over 120 languages to ensure local relevance.

- Customizable Content: Personalize phishing emails and landing pages using 80+ merge tags, making simulations more targeted and impactful.

- Instant Micro-Training: Automatically deliver quick training when risky behavior is detected, reinforcing learning at the moment of error.

By leveraging Keepnet’s phishing simulation software, organizations can continuously enhance their employees’ awareness and response to deepfake and other phishing threats, building strong cybersecurity habits over time.

Further Reading

If you want to go deeper into AI-driven social engineering, these guides will help you build stronger defenses and run safer simulations:

- Deepfake Statistics & Trends 2025

- What is Deepfake Phishing?

- Deepfakes: How to Spot Them and Stay Protected

- How Deepfakes Threaten Your Business (Examples & Types)

- What is Vishing? (Definition, Detection & Protection)

- 10 Vishing Examples & How to Prevent Them

- What is MFA Fatigue Attack and How to Prevent It

- What is Quishing (QR Phishing)?

Editor's note (Updated on January 7, 2026): This article has been reviewed and updated to include the latest deepfake phishing trends, real-world cases, and practical verification controls for modern organizations.