What is Shadow AI?

Shadow AI use is rising fast—and so are the risks. This blog explains what Shadow AI is, why employees use it, and how IT leaders can detect and govern it effectively.

Shadow AI is the use of any AI tool or application inside a company without approval, monitoring, or support from IT or security teams.

This can include:

- Public generative AI tools like ChatGPT, Gemini, or Claude

- Image tools like DALL·E or Midjourney

- AI copilots for coding, writing, or analysis

- Browser extensions or small AI apps employees install themselves

In 2025, a growing body of research shows that unauthorized use of AI in the workplace is no longer rare; it is common. For example, a recent survey found that 98% of organizations have employees using unsanctioned apps, including shadow AI, exposing companies to vulnerabilities. (Source)

Meanwhile, another study suggests that Shadow AI incidents account for 20% of all data breaches, with an average cost premium of $670,000 compared to standard breaches. (Source)

Employees use these tools to write emails, summarize documents, translate text, draft code, or prepare presentations. The problem is not AI itself. The problem is AI in the shadows – outside company control.

In this blog, you’ll learn what shadow AI is, why it happens, what dangers it creates, and how your organization can manage it in a smart and practical way.

What exactly is Shadow AI?

Shadow AI is when employees, without telling IT or security, use public or consumer-grade AI tools (for example, chatbots like ChatGPT, image tools like DALL·E or Midjourney, coding assistants, or browser extensions) to handle work-related tasks: drafting emails, summarizing documents, writing code, preparing presentations, translating content, and more.

The “shadow” part comes from the fact that these tools are used outside the control, visibility, or approval of IT, compliance, or security teams.

Because AI tools often process potentially sensitive data — company emails, internal documents, financials, source code — the risk is greater than with “ordinary” unsanctioned apps.

Shadow AI isn’t just a matter of convenience or productivity — it’s a governance, security, privacy, and compliance problem.

Shadow AI vs. Shadow IT

Shadow AI is related to shadow IT, but not the same:

- Shadow IT: Any technology (apps, cloud services, devices) used without IT approval.

- Shadow AI: Specifically AI tools and models used without oversight, often relying on external data and complex decision-making.

Because AI tools learn from and process data, the risk is usually higher than with a simple unsanctioned app.

Check out our article on Shadow IT to dive deeper into how it differs from Shadow AI.

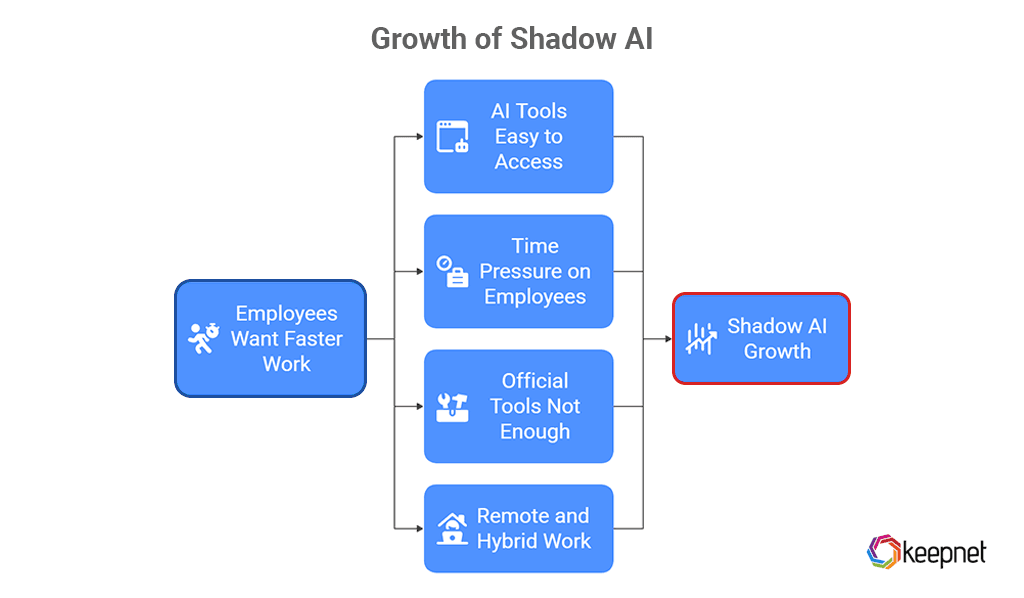

Why Is Shadow AI Growing So Fast?

Shadow AI is not happening because employees are evil. It usually happens because they want to get work done faster.

Several trends push shadow AI forward:

AI tools are easy to access

Most tools run in the browser. No installation is needed. Anyone can sign up in seconds.

Employees are under time pressure

People feel pressure to produce more in less time. AI looks like a quick way to write, code, or analyze.

Official tools are not enough

Many organizations still do not provide approved AI solutions or clear policies. So employees search the web and pick whatever works for them.

Remote and hybrid work

Outside the office, people feel more free to experiment with tools the company does not know about.

Shadow AI Statistics

Shadow AI is expanding inside enterprises faster than most security teams can track or control. Unmonitored AI tools introduce serious risks, from accidental data exposure to compromised intellectual property. The following Shadow AI statistics reveal the true scale of the Shadow AI problem.

- 98% of organizations have employees using unsanctioned apps, including shadow AI, exposing companies to vulnerabilities. (Source)

- 76% of businesses now have active Bring Your Own AI (BYOAI) use within their workforce due to overlapping unsanctioned AI adoption. (Source)

- In organizations with high levels of shadow AI, 65% of security breaches compromised personally identifiable information (PII) and 40% compromised intellectual property (IP). (Source)

- 97% of AI-related breaches lacked proper AI access controls, highlighting a major security gap. (Source)

- 63% of organizations lack AI governance policies, increasing risks from unmonitored AI use. (Source)

- Shadow AI incidents account for 20% of all data breaches, with an average cost premium of $670,000 compared to standard breaches. (Source)

- 37% of staff use shadow AI in 2025, posing a significant corporate security threat. (Source)

- OpenAI services represent 53% of all shadow AI usage in studied organizations, processing data from over 10,000 enterprise users. (Source)

- 57% of employees hide their AI use at work, indicating a lack of enterprise AI planning. (Source)

- AI-associated data breaches cost organizations more than $650,000 on average, per IBM's 2025 report. (Source)

- 78% of AI users bring their own AI tools to work, according to Microsoft and LinkedIn's 2024 Work Trend Index. (Source)

- More than 80% of workers, including 90% of security professionals, use unapproved AI tools. (Source)

- 71% of office workers admit to using AI tools without IT approval. (Source)

- Nearly 20% of businesses have experienced data breaches due to unauthorized AI use. (Source)

- 60% of users still rely on personal, unmanaged SaaS AI apps for shadow AI activities. (Source)

- 89% of organizations actively use at least one SaaS generative AI app. (Source)

- There was a 50% increase in people interacting with AI apps in organizations over the past three months (as of mid-2025). (Source)

- Shadow generative AI usage in enterprises surged by 68%, based on 2025 telemetry data. (Source)

- 68% of employees use free-tier AI tools like ChatGPT via personal accounts. (Source)

- 57% of employees using free-tier AI tools input sensitive company data. (Source)

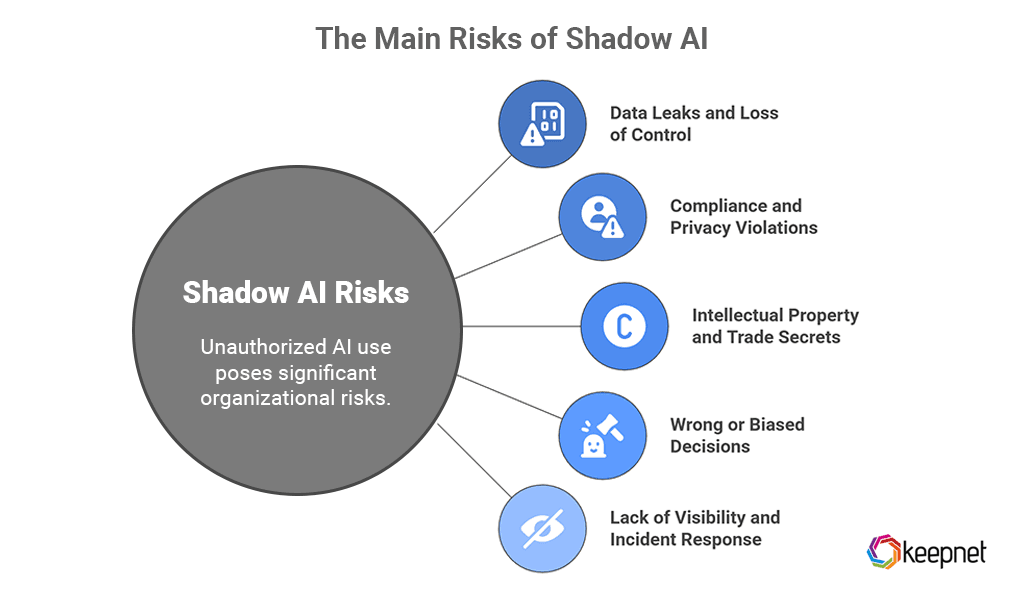

The Main Risks of Shadow AI

Shadow AI can feel harmless: you paste some text, get a nice answer, and move on. But for the organization, the risks add up quickly.

1. Data leaks and loss of control

When employees paste:

- customer data

- internal emails

- financial reports

- source code

into a public AI tool, they may expose confidential information to third parties.

In some cases, that data might be stored, logged, or used to further train the AI model. The company then loses control and may not be able to delete it.

2. Compliance and privacy violations

Many laws and regulations (GDPR, HIPAA, industry-specific rules) set strict requirements for:

- where personal data can be stored

- how it can be processed

- who can access it

If employees feed personal or regulated data into tools not assessed or approved by the organization, the company may break the law without even noticing.

This can lead to fines, legal action, and serious reputational damage.

3. Intellectual property and trade secrets

Source code, product designs, strategy documents, and research are all highly valuable. If these are shared with external AI tools, there is a risk of:

- loss of trade secrets

- competitors learning from leaked information

- future disputes about ownership of generated content

Companies with high levels of shadow AI already see higher data breach costs on average than those with minimal unauthorized AI use.

4. Wrong or biased decisions

AI can be helpful, but it can also:

- hallucinate or invent facts

- copy outdated or biased patterns from its training data

- misinterpret context

If employees rely on shadow AI for financial analysis, HR decisions, or security settings, the results can be incorrect, unfair, or risky.

5. Lack of visibility and incident response

Security teams cannot protect what they cannot see. With shadow AI:

- they do not know which tools are in use

- they cannot track what data leaves the network

- they struggle to investigate incidents

If there is a breach, it becomes very hard to answer simple questions like “What data was exposed?” or “Who used which tool when?”

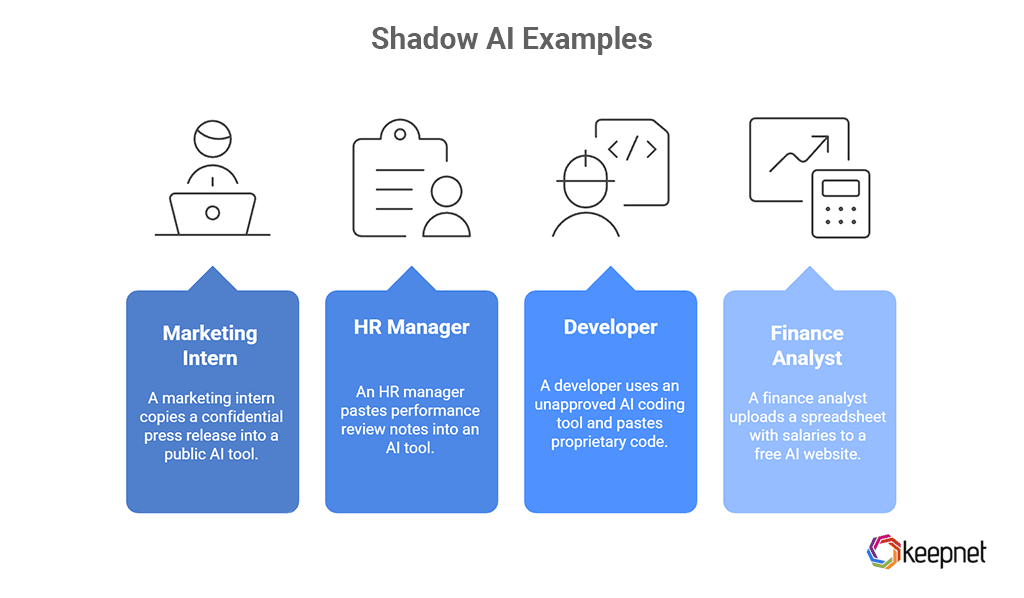

Examples of Shadow AI

Shadow AI is not a theory. Here are some everyday examples:

Marketing intern and a public chatbot

A marketing intern copies a full draft of a confidential press release, including unreleased product details, into a public AI tool to “improve the tone.” The content leaves the company boundary.

HR manager and performance reviews

An HR manager pastes performance review notes, including names and sensitive comments, into an AI tool to generate polished feedback.

Developer and AI coding assistant

A developer pastes proprietary code into an unapproved AI coding tool. Later, some of that code appears in a suggestion the tool makes to another user working on a public project.

Finance analyst and budget summaries

A finance analyst uploads an entire spreadsheet with salaries and cost projections to a free AI website to generate charts and summaries.

Each of these actions saves time. Each also creates a potential security, privacy, or compliance incident.

How Big Is the Shadow AI Problem?

Analysts expect shadow AI to keep growing. Gartner predicts that by 2030, around 40% of enterprises will face security or compliance incidents related to shadow AI.

At the same time, surveys show that:

- Most employees have tried unapproved AI tools

- Senior leaders are often more likely than junior staff to use them

- Only a minority of organizations have clear and enforced AI usage policies

The message is clear: this is not a niche or future problem. It is already happening in most organizations.

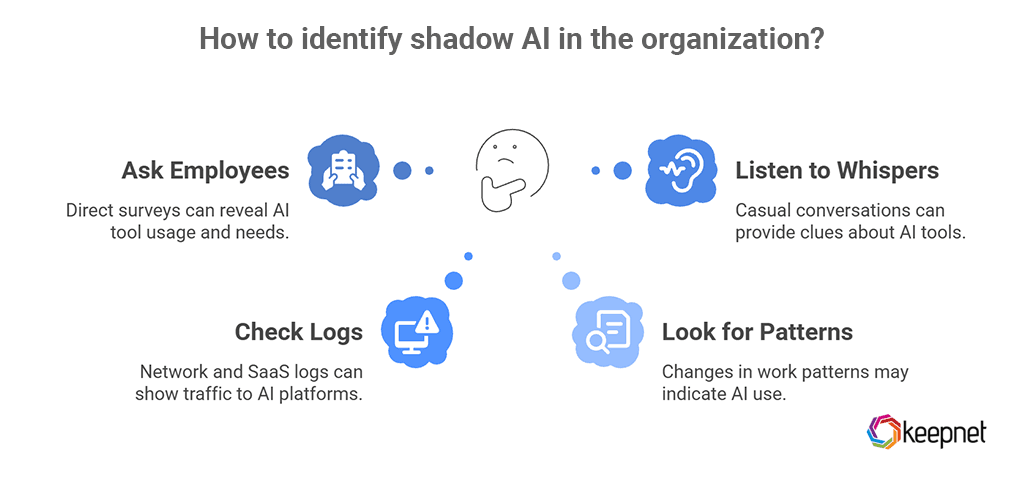

How to Spot Shadow AI in Your Organization

You cannot manage shadow AI if you do not know it exists. Here are some simple ways to start:

- Ask employees directly: Run anonymous surveys. Ask which AI tools they use, what for, and why.

- Check network and SaaS logs: Security and IT teams can review traffic to known AI platforms and browser extensions.

- Look for AI-shaped work patterns: A sudden jump in content output, a similar writing style across teams, or unusual automation may hint at AI use.

- Listen to “whispers”: People often talk about “this cool AI I found” or “I used a bot to do this.” Take those comments seriously.

The goal here is not to punish people. The goal is to understand real needs and bring AI use into the light.
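To make the log-review step more concrete, here is a minimal Python sketch. It assumes a simplified proxy log in which each line holds a timestamp, a user name, and a URL; real proxy or SaaS logs will look different. The `AI_DOMAINS` watchlist, the `summarize_ai_traffic` function, and the sample entries are all illustrative, not taken from any specific product.

```python
from collections import Counter
from urllib.parse import urlparse

# Illustrative watchlist (an assumption); extend it with the AI services
# that matter in your organization.
AI_DOMAINS = {"chatgpt.com", "gemini.google.com", "claude.ai", "midjourney.com"}


def is_ai_host(host: str) -> bool:
    """Return True if host equals, or is a subdomain of, a watched AI domain."""
    host = host.lower()
    return any(host == d or host.endswith("." + d) for d in AI_DOMAINS)


def summarize_ai_traffic(log_lines):
    """Count requests per (user, host) for watched AI domains.

    Assumes each line looks like: '<timestamp> <user> <url>'.
    """
    counts = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip lines that do not match the assumed format
        _, user, url = parts
        host = urlparse(url).hostname or ""
        if is_ai_host(host):
            counts[(user, host)] += 1
    return counts


# Made-up sample data for demonstration only.
sample_log = [
    "2025-11-03T09:14:02Z alice https://chatgpt.com/c/123",
    "2025-11-03T09:15:40Z bob https://intranet.example.com/wiki",
    "2025-11-03T09:17:11Z alice https://chatgpt.com/c/456",
    "2025-11-03T09:20:55Z carol https://claude.ai/new",
]

for (user, host), n in sorted(summarize_ai_traffic(sample_log).items()):
    print(f"{user} -> {host}: {n} request(s)")
```

A summary like this only shows who reached which service and how often, not what data was sent. In line with the goal above, it works best as a starting point for conversations about real needs rather than as evidence for punishment.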

How to Manage Shadow AI Safely

Shadow AI will not disappear. Instead of banning AI, organizations should guide its use.

Here is a step-by-step approach.

1. Create a clear and simple AI policy

Write a policy in plain language that explains:

- Which AI tools are allowed

- Which use cases are allowed or forbidden

- What types of data must never be entered into public AI tools

- Who to ask for help or approval

Keep it short and practical. People should be able to read and apply it in a few minutes.
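A written policy can also be mirrored in a small machine-readable form, so that tools can check requests against it. The Python sketch below is one possible shape, not a standard: the `AIPolicy` class, the tool name `approved-assistant`, and the two regular expressions are hypothetical examples. Simple patterns like these will miss many kinds of sensitive data and can raise false alarms, so treat them as a first filter only.

```python
import re
from dataclasses import dataclass, field


@dataclass
class AIPolicy:
    """A tiny, hypothetical representation of an AI usage policy."""
    allowed_tools: set
    # Maps a human-readable label to a regex for data that must not be entered.
    forbidden_patterns: dict = field(default_factory=dict)

    def check(self, tool: str, text: str) -> list:
        """Return a list of policy problems; an empty list means no issue found."""
        problems = []
        if tool not in self.allowed_tools:
            problems.append(f"tool not approved: {tool}")
        for label, pattern in self.forbidden_patterns.items():
            if re.search(pattern, text):
                problems.append(f"forbidden data: {label}")
        return problems


# Example policy; names and patterns are assumptions for illustration.
policy = AIPolicy(
    allowed_tools={"approved-assistant"},
    forbidden_patterns={
        "email address": r"[\w.+-]+@[\w-]+\.[\w.-]+",
        "US SSN-like number": r"\b\d{3}-\d{2}-\d{4}\b",
    },
)

print(policy.check("approved-assistant", "Summarize our public blog post"))
print(policy.check("random-chatbot", "Contact jane.doe@example.com"))
```

Keeping the rules in one small structure makes it easier to keep the written policy and any automated checks in sync when either changes.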

2. Offer safe, approved AI tools

If you only say “no,” employees will keep using shadow AI in secret. Instead:

- Provide secure, enterprise-grade AI tools

- Integrate them into everyday workflows (email, documents, coding, CRM)

- Explain why these tools are safer (data protection, logging, access control)

When employees have good, safe tools, they are less likely to search for risky ones.

3. Train and educate everyone

Education is one of the most powerful controls. Make sure staff understand:

- What shadow AI is

- Why data privacy and compliance matter

- What can go wrong when using public AI tools

- How to use company-approved AI safely

Analysts and professional bodies agree that training and awareness are key to reducing shadow AI incidents.

4. Build AI governance and oversight

Treat AI like any other powerful technology:

- Include AI in risk assessments and vendor reviews

- Involve legal, security, HR, and business leaders

- Set up processes to approve new AI tools

- Monitor usage and adjust policies as the technology evolves

Good governance does not kill innovation. It gives structure so innovation can be safe and sustainable.

5. Encourage a “speak up” culture

Employees must feel safe to say:

- “I have been using this AI tool. Can we make it official?”

- “I am not sure if this is okay. Can someone check?”

If people fear punishment, shadow AI will stay hidden. If they feel supported, they will help you improve.

Editor’s note: This article was updated on December 4, 2025.