Agentic AI in Cybersecurity: The Next Frontier for Human-Centric Defense

Agentic AI is reshaping cybersecurity, enabling real-time threat detection, phishing prevention, and automated risk mitigation. Learn how Keepnet integrates AI into security awareness, incident response, and compliance to stay ahead of evolving cyber threats.

Adaptive security training software helps organizations reduce human risk by personalizing security awareness training based on employee role, behavior, and real-time signals. As AI-enabled social engineering becomes more targeted and scalable, generic “one-size-fits-all” training leaves gaps.

Keepnet’s adaptive security awareness training automatically adjusts learning paths using AI-driven personalization—delivering just-in-time microlearning after risky actions, reinforcing positive behavior with nudges, and increasing engagement through gamification.

In this guide, you’ll learn what agentic AI means in cybersecurity, how it works step by step, where it adds value beyond the SOC, and how Keepnet applies it to help teams build measurable security habits.

What is Agentic AI in Cybersecurity? Definition and Context

Agentic AI is an advanced type of artificial intelligence that can detect, respond to, and prevent cyber threats without human intervention. Unlike traditional security tools that rely on predefined rules, Agentic AI continuously learns and adapts to evolving threats.

In cybersecurity, it is used to:

- Identify phishing attacks and malware in real time.

- Automatically respond to security incidents by blocking threats.

- Predict and prevent cyberattacks before they occur.

However, cybercriminals are also exploiting Agentic AI. If the underlying AI model is compromised, attackers can automate large-scale phishing and social engineering attacks without detection.

At Keepnet, we integrate Agentic AI into our Human Risk Management solutions to stay ahead of evolving threats and enhance automated threat detection and response.

Agentic AI vs Generative AI vs SOAR: What’s the Difference?

Many readers mix up agentic AI, generative AI, and SOAR automation—but they solve different problems. Generative AI (GenAI) is excellent at creating content and assisting humans (like drafts, summaries, and answers), but it usually doesn’t take independent action. SOAR and rule-based automation can execute workflows, but they typically depend on predefined playbooks (“if X happens, then do Y”). Agentic AI is different: it has a goal (like reduce phishing risk), it can choose the next step, use tools, and adjust strategy when the situation changes. In other words: GenAI is a smart assistant, SOAR is a scripted workflow engine, and agentic security AI is the one that can investigate + decide + act.

Why this matters for modern cyber defense

This difference matters because today’s threats change fast. A static playbook may stop yesterday’s email phishing, but it might struggle against AI-generated spear phishing, multi-channel scams, or deepfake impersonation. Agentic AI can connect signals across systems, then adapt its response—especially when you combine it with human risk management, training, and reporting workflows.

Why Agentic AI Is the New Minimum Standard for Cyber Defense

To understand why we are moving toward Agentic AI in cybersecurity, it helps to stop thinking of "AI" as a single piece of software and start thinking of it as the difference between a searchlight and a security guard.

Traditional AI is like a powerful searchlight: it can spot a "break-in" if it’s pointed at the right spot, but it can’t chase the intruder, lock the doors, or figure out how they got over the fence. Agentic AI is the security guard—it has a goal (keep the building safe), it can move around, it can use tools, and it makes decisions in the moment without waiting for someone to tell it what to do next.

Here is why this shift is becoming a "must-have" rather than a "nice-to-have."

1. The End of the "Human Speed" Era

In the past, a cyberattack moved at the speed of a human typing. Today, attacks are automated. A "Zero-Day" exploit can compromise an entire network in minutes.

The Problem: By the time a human analyst wakes up, drinks their coffee, and looks at an alert, the data is already gone.

The Agentic Fix: An AI Agent doesn’t just "alert" a human. It sees the breach, realizes it’s a threat to its goal (keeping the server online), and autonomously quarantines the infected laptop or shuts down the compromised port in milliseconds.

2. Managing the "Alert Blizzard"

Modern security teams are drowning. A typical mid-sized company might get 10,000 security alerts a day. Most are "noise"—false alarms like a VPN glitch or a forgotten password.

The Problem: Human analysts get "alert fatigue." They start missing the real needle in the haystack because there is simply too much hay.

The Agentic Fix: Agentic AI acts as a Tier 1 Analyst. It doesn't just flag an alert; it investigates it. It can log into other systems, check if the "suspicious" user is actually on vacation, verify the file hash, and then—only if it’s truly dangerous—hand it to a human with a full report already written.
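The triage behavior described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Alert` fields, the in-memory lookup tables, and the escalation rule are all hypothetical stand-ins for the HR, identity, and threat-intelligence systems a real agent would query.

```python
from dataclasses import dataclass, field

# Made-up data for illustration only. A real agent would call external systems.
HOME_COUNTRY = {"alice": "GB", "bob": "GB"}
TRAVEL_CALENDAR = {"alice": "ES"}            # user -> country they are visiting
KNOWN_BAD_HASHES = {"deadbeef-example-hash"}  # placeholder, not a real file hash


@dataclass
class Alert:
    user: str
    login_country: str
    file_hash: str
    description: str


@dataclass
class TriageResult:
    escalate: bool
    report: list[str] = field(default_factory=list)


def triage(alert: Alert) -> TriageResult:
    """Gather context for one alert and decide whether a human should see it."""
    report = [f"Alert: {alert.description} (user={alert.user})"]

    # Check 1: is the attached file already known to be malicious?
    hash_is_bad = alert.file_hash in KNOWN_BAD_HASHES
    report.append(f"File hash known-bad: {hash_is_bad}")

    # Check 2: is the login location explained by home country or travel?
    expected = {HOME_COUNTRY.get(alert.user), TRAVEL_CALENDAR.get(alert.user)}
    location_explained = alert.login_country in expected
    report.append(f"Login location explained: {location_explained}")

    # Hypothetical rule: escalate on a bad file or an unexplained location.
    escalate = hash_is_bad or not location_explained
    report.append("Decision: escalate" if escalate else "Decision: close as noise")
    return TriageResult(escalate=escalate, report=report)
```

The point of the design is that the analyst receives the `report` lines together with the decision, so the context-gathering work is already done when a case is escalated.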

3. Dealing with "Shadow" Environments

Our digital world is no longer just "computers in an office." It’s cloud servers, remote laptops, smart fridges, and third-party APIs.

The Problem: Security teams can't be everywhere at once. There are too many "dark corners" where an attacker can hide.

The Agentic Fix: Agentic AI is decentralized. You can have dozens of small "sub-agents" living in different parts of your network. They talk to each other, share notes ("Hey, I saw a weird login on my end, did you?"), and coordinate a defense that covers the entire map, not just the front door.

4. Closing the "Talent Gap"

There is a global shortage of nearly 4 million cybersecurity professionals. We simply don't have enough humans to win this fight.

The Reality: We can't hire our way out of this.

The Agentic Fix: Agentic AI acts as a Force Multiplier. It handles the boring, repetitive, and high-speed "grunt work," allowing the few human experts we do have to focus on high-level strategy and complex "hunt" missions.

The Bottom Line: We aren't replacing humans; we are finally giving them teammates who can keep up with the speed of the modern internet.

Comparison: Traditional vs. Agentic Cybersecurity

| Feature | Traditional Security AI | Agentic AI (The New Way) |
|---|---|---|
| Action | Reactive (Wait for trigger) | Proactive (Pursues a goal) |
| Decision | "If-This-Then-That" rules | Multi-step reasoning & planning |
| Tool Use | Stays inside one app | Can use APIs, SSH, and Cloud tools |
| Learning | Needs manual updates | Learns and adapts from every "near miss" |

How Agentic AI Works in Cybersecurity: A Simple Model

To understand agentic AI security, imagine a system that works like a strong security analyst—but faster and always awake. An agentic model usually follows a loop: it observes signals, makes sense of the context, decides on a plan, uses tools to execute steps, and then learns from the outcome. This is why agentic AI is often described as “multi-step reasoning plus tool use.” It’s not magic; it’s a structured decision cycle designed for speed, scale, and continuous improvement.

The Agentic Security Loop: Observe → Reason → Act → Learn

In practice, an agent might observe a suspicious email, a new domain, a user report, or a risky click. Then it reasons: Is this actually malicious? Who else received it? What is the impact? Next, it acts by removing the threat, isolating exposure, or triggering a training intervention. Finally, it learns from “near misses,” false positives, and real incidents to improve future decisions. This loop is exactly what makes agentic AI a strong match for phishing prevention, incident response automation, and human-centric cyber defense.
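As a rough illustration of the loop, the sketch below models an agent that scores a signal, decides whether to quarantine, and adjusts its own threshold after feedback. The class name, the `suspicion` score, and the threshold-adjustment rule are assumptions made for this example and do not describe any specific product.

```python
from dataclasses import dataclass


@dataclass
class Signal:
    kind: str         # e.g. "user_report" or "risky_click"
    suspicion: float  # 0.0 (benign) to 1.0 (malicious), from a hypothetical scorer


class SecurityAgent:
    """Toy Observe -> Reason -> Act -> Learn cycle with one tunable threshold."""

    def __init__(self, threshold: float = 0.7, step: float = 0.05):
        self.threshold = threshold
        self.step = step

    def reason(self, signal: Signal) -> bool:
        # Reason: treat the signal as malicious if it crosses the threshold.
        return signal.suspicion >= self.threshold

    def act(self, signal: Signal) -> str:
        # Act: pick one of two simplified actions.
        return "quarantine" if self.reason(signal) else "allow"

    def learn(self, action: str, was_malicious: bool) -> None:
        # Learn: a false positive relaxes the threshold, a near miss tightens it.
        if action == "quarantine" and not was_malicious:
            self.threshold = min(1.0, self.threshold + self.step)
        elif action == "allow" and was_malicious:
            self.threshold = max(0.0, self.threshold - self.step)

    def run_once(self, signal: Signal, was_malicious: bool) -> str:
        # Observe is the incoming signal; the rest of the cycle follows.
        action = self.act(signal)
        self.learn(action, was_malicious)
        return action
```

In this sketch a missed malicious signal lowers the threshold, so a similar signal seen later is more likely to be quarantined. A production system would learn from far richer feedback than a single number.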

Human-Centric Agentic Defense: The Missing Link in Cybersecurity

Most articles about agentic AI focus only on the SOC (Security Operations Center). But the truth is simple: many breaches still start with a human moment—clicking, replying, scanning a QR code, sharing a code, or trusting a voice. Human-centric defense means we don’t blame employees; we design systems that help them succeed. Agentic AI can do exactly that because it can respond to behavior in real time and personalize the next best action—whether that action is blocking a threat, warning a user, or delivering a short microlearning “nudge.”

The Human Risk Loop: Detect → Intervene → Train → Measure

A practical human-risk loop looks like this: detect risky behavior (or risky exposure), intervene quickly, provide targeted training, and measure improvement over time. This is where agentic AI becomes a behavior engine, not just a detection engine. Instead of waiting for annual training to change habits, you can reduce risk continuously with adaptive simulations, reporting workflows, and just-in-time learning. This matches what CISOs increasingly look for: autonomous security awareness, AI-driven human risk management, and behavior-based cybersecurity.
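The detect, intervene, train, and measure steps can be made concrete with a small sketch. The event names, the module titles in `MICROLEARNING`, and the "risky rate" metric are hypothetical choices for illustration, not Keepnet's actual data model.

```python
from typing import Optional

# Hypothetical mapping from a detected risky behavior to a short training module.
MICROLEARNING = {
    "clicked_phish_link": "Spotting suspicious links",
    "scanned_unknown_qr": "QR code phishing basics",
    "shared_mfa_code": "Never share one-time codes",
}


def intervene(event_type: str) -> Optional[str]:
    """Intervene + Train: pick a just-in-time module for the detected behavior."""
    return MICROLEARNING.get(event_type)


def risky_rate(events: list[dict]) -> float:
    """Share of simulation events in which the user took the risky action."""
    if not events:
        return 0.0
    return sum(1 for e in events if e["risky"]) / len(events)


def measure(before: list[dict], after: list[dict]) -> float:
    """Measure: positive values mean risky behavior dropped after training."""
    return risky_rate(before) - risky_rate(after)
```

For example, if a user took the risky action in two of four simulations before training and in one of four afterwards, `measure` returns an improvement of 0.25.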

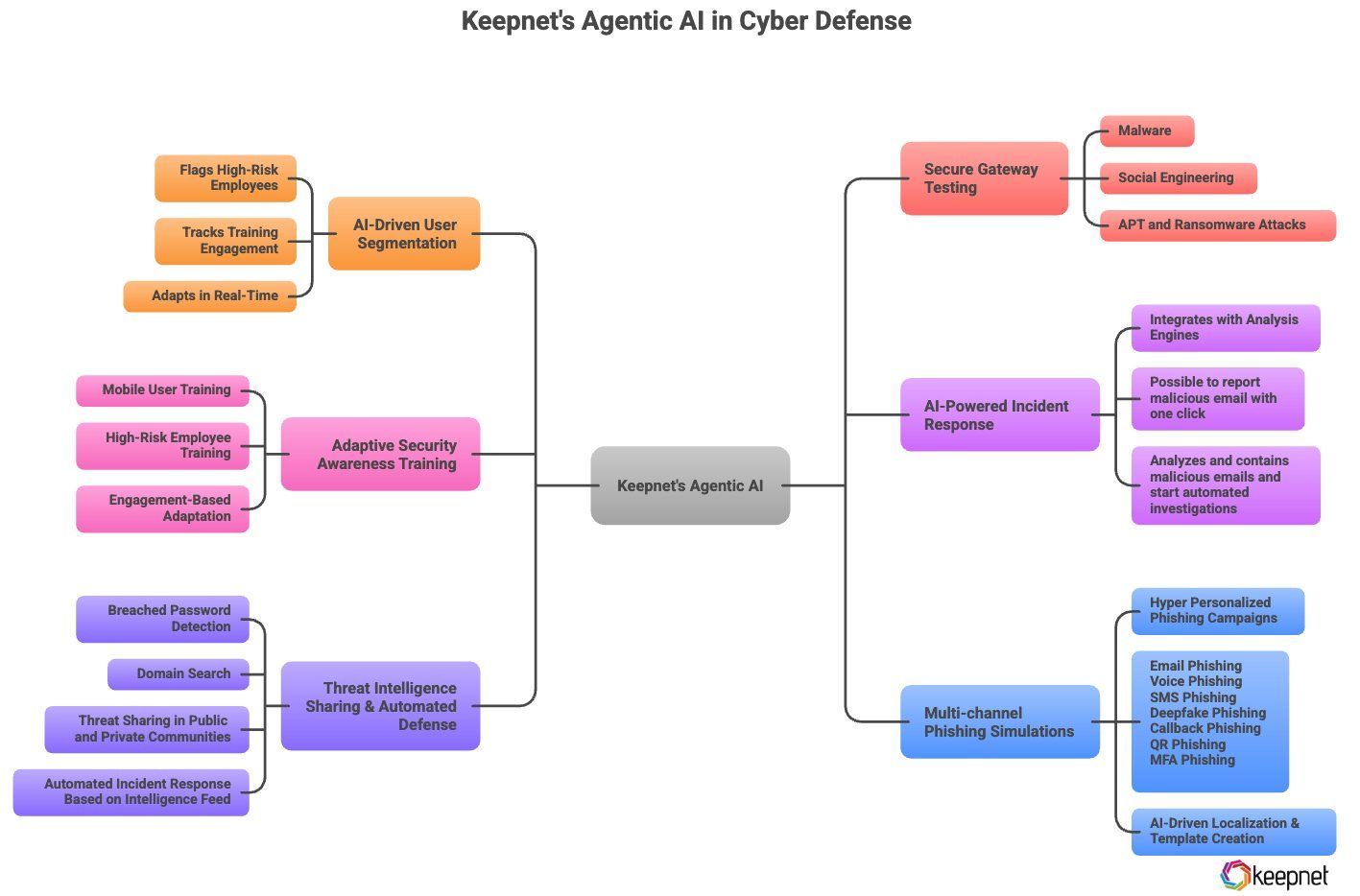

How Keepnet Leverages Agentic AI

Keepnet has integrated Agentic AI into its Extended Human Risk Management Platform to strengthen cybersecurity by automating threat detection, response, and prevention. Here’s how:

1. AI-Driven User Segmentation

Keepnet’s AI analyzes user behavior to identify security risks. It:

- Flags high-risk employees who frequently fall for phishing.

- Tracks training engagement and adjusts learning paths accordingly.

- Adapts in real-time based on roles, departments, and past interactions.

Forrester emphasizes that businesses should focus on applying AI to achieve specific cybersecurity outcomes, ensuring technology aligns with their core security objectives. (Source)
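To make the segmentation idea more tangible, here is a minimal scoring sketch. The fields, the weights (0.6, 0.3, 0.1), and the segment cut-offs are invented for illustration; they are assumptions, not the scoring model Keepnet uses.

```python
from dataclasses import dataclass


@dataclass
class UserStats:
    name: str
    simulations_sent: int
    simulations_failed: int
    training_assigned: int
    training_completed: int
    privileged_role: bool


def risk_score(u: UserStats) -> float:
    """Combine phishing failures, training gaps, and role into one 0..1 score."""
    fail_rate = u.simulations_failed / u.simulations_sent if u.simulations_sent else 0.0
    training_gap = (
        1 - u.training_completed / u.training_assigned if u.training_assigned else 0.0
    )
    role_bonus = 0.1 if u.privileged_role else 0.0
    # Hypothetical weights: past behavior counts most, then engagement, then role.
    return 0.6 * fail_rate + 0.3 * training_gap + role_bonus


def segment(u: UserStats) -> str:
    """Map the score to a coarse segment using made-up cut-offs."""
    score = risk_score(u)
    if score >= 0.5:
        return "high"
    if score >= 0.2:
        return "medium"
    return "low"
```

A user who fails most simulations, skips assigned training, and holds a privileged role lands in the "high" segment, while a user with no failures and complete training lands in "low".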

2. Multi-Channel Phishing Simulations

Keepnet Phishing Simulator uses AI to create customized phishing attacks based on user profiles. It mimics real-world threats, including:

- Email phishing

- Voice phishing (vishing)

- SMS-based phishing (smishing)

- QR code phishing (quishing)

- Callback phishing scams

- MFA phishing scams

This approach enhances employees' ability to detect and respond to phishing threats by exposing them to realistic, AI-generated attack simulations before they face actual cyber threats.

3. Adaptive Security Awareness Training

Traditional, one-size-fits-all security training is ineffective. Keepnet’s Security Awareness Training adapts to each employee’s behavior to improve learning and retention.

- Mobile users receive specialized training on QR phishing, smishing, and callback phishing to help them recognize and avoid common mobile-based threats.

- High-risk employees go through interactive, scenario-based training that simulates real-world cyberattacks and strengthens decision-making.

- Training content adapts based on engagement levels, ensuring employees who need extra support receive more targeted learning.

For a deeper look at how role-based security awareness training can be customized and adapted to different user needs, read Keepnet’s article on Role-Based Security Awareness Training.

4. AI-Powered Incident Response

Keepnet’s Incident Responder automates email threat detection and response.

- Integrates with 20+ analysis engines and SOAR solutions for advanced threat analysis.

- Detects zero-day attacks using AI-driven security measures.

- Scans 7,500 inboxes in 5 minutes for rapid threat removal.

- Supports Office 365, Google Workspace, and Exchange for seamless integration.

- Phishing Reporter add-in enables instant threat reporting by employees.

- Ensures privacy with no third-party data sharing and scans archives for hidden threats.

5. Threat Intelligence Sharing & Automated Defense

Keepnet’s Threat Intelligence detects breaches and enhances cybersecurity.

- Breached Password Detection: Identifies exposed employee credentials.

- Domain Search: Scans company and supply chain email addresses.

- Breach Insights: Provides details on compromised data.

- Seamless Integration: Connects with existing security tools and SIEM solutions.

- Continuous Monitoring: Alerts on potential breaches for ongoing protection.

- Privacy Assurance: Ensures encrypted, non-stored password searches.

6. Secure Gateway Testing with Email Threat Simulator

Instead of waiting for attacks, our Email Threat Simulator helps organizations:

- Identify security gaps before attackers exploit them.

- Simulate sophisticated phishing campaigns to assess security posture.

- Strengthen defenses through AI-driven analysis.

Agentic AI Risks & Ethical Considerations

While Agentic AI improves cybersecurity, it also presents new challenges. Cybercriminals are using AI to create more convincing phishing scams, deepfake attacks, and automated hacking techniques.

Key concerns include:

- Reliability Issues: AI can make errors in detecting cyber threats, leading to missed attacks or false alarms.

- Legal & Compliance Risks: Regulations like GDPR and CCPA require AI systems to be more transparent and accountable.

- Black-Box Concerns: AI decisions can be difficult to explain, making human oversight necessary to ensure fairness and accuracy.

As AI becomes more integrated into cybersecurity, organizations must address these risks to ensure safe and ethical use.

Top Agentic AI Use Cases in Cybersecurity (Beyond the SOC)

Agentic AI is powerful because it can operate across security domains, not only within one tool. The biggest value appears when agents connect identity, email, endpoints, cloud, and user behavior, then act with clear goals like “reduce phishing success” or “contain exposure.” Below are high-impact use cases, covering both the agentic SOC and human risk.

1) Agentic SOC Triage: Reduce alert fatigue with autonomous investigation

Instead of flooding analysts with raw alerts, agentic AI can triage and investigate: it collects context, correlates signals, and delivers a clean “what happened + what to do next” summary. This is the core of what is often called an agentic SOC or an AI SOC analyst. It also supports human teams by reducing burnout and improving response consistency.

2) Autonomous Phishing Containment: Remove threats fast, not hours later

With phishing, speed matters. A good agentic workflow can identify malicious messages, find all recipients, remove or quarantine the emails, and trigger reporting and training sequences. The result is automated email incident response that limits how long a malicious message sits in inboxes.
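The containment workflow above can be sketched as follows. The `Email` structure and the matching rule (same sender and subject as the reported message) are simplifying assumptions; a real system would match on more robust indicators and call a mail platform API instead of editing an in-memory list.

```python
from dataclasses import dataclass


@dataclass
class Email:
    recipient: str
    sender: str
    subject: str
    clicked: bool = False
    quarantined: bool = False


def contain(mailboxes: list[Email], reported: Email) -> dict:
    """Quarantine every copy of a reported message and list who needs training."""
    # Step 1: find all recipients of the same campaign (simplified match).
    matches = [
        m for m in mailboxes
        if m.sender == reported.sender and m.subject == reported.subject
    ]
    # Step 2: quarantine each copy.
    for m in matches:
        m.quarantined = True
    # Step 3: recipients who already clicked get a follow-up training assignment.
    return {
        "quarantined_for": sorted(m.recipient for m in matches),
        "assign_training_to": sorted(m.recipient for m in matches if m.clicked),
    }
```

A single user report is enough to trigger the sweep, which is why reporting, removal, and training are treated as one sequence rather than three separate tasks.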

3) Multi-Channel Social Engineering Defense: QR, SMS, voice, and MFA fatigue

Attackers don’t use one channel anymore. Agentic AI becomes more valuable when it supports defenses against smishing, quishing, vishing, callback scams, and MFA fatigue, extending awareness and simulation programs beyond email.

4) Deepfake & Impersonation Readiness: Executive protection at human speed

Deepfake and impersonation attacks are growing because they exploit trust. Agentic AI can support detection signals, playbooks, and training interventions for executives and high-risk roles, which fits a human-centric approach to deepfake phishing prevention and CEO fraud training.

5) Compliance Evidence Automation: Audit-ready logs and proof of controls

Security and compliance teams spend too much time collecting evidence. Agents can help produce audit-ready logs, incident timelines, and training completion records, reducing the manual effort behind security audits.

How Keepnet Ensures AI Safety & Compliance

Keepnet follows a strict AI governance framework to minimize risks and ensure responsible AI use. Key measures include:

- Bias Reduction: AI models are continuously trained on diverse datasets to prevent biased decision-making.

- Transparency & Oversight: AI decisions are logged, reviewed, and validated with human oversight.

- Regulatory Compliance: Keepnet adheres to GDPR, CCPA, ISO/IEC 42001:2023, and emerging AI ethics standards.

These safeguards ensure AI remains accurate, fair, and aligned with security regulations.

To learn more about the safe use of AI in cybersecurity, read our guide on AI-driven Security Awareness Training.

Keepnet's ISO/IEC 42001:2023 Certification

Keepnet is committed to data privacy, AI governance, and cloud security, holding key ISO certifications that ensure compliance with global standards.

- ISO/IEC 42001:2023 – Establishes a framework for responsible AI management, covering ethics, risk management, transparency, and data integrity.

- ISO/IEC 27017 – Focuses on securing cloud environments, helping both cloud service providers and users reduce security risks.

- ISO/IEC 27018 – The first international standard for cloud data privacy, setting guidelines for protecting Personally Identifiable Information (PII).

These certifications reflect Keepnet’s commitment to security, regulatory compliance, and trust in AI-driven and cloud-based cybersecurity solutions.

To learn more, read Keepnet Achieves ISO 42001 - Certification For AI Management for details on how Keepnet ensures responsible AI management and compliance with global security standards.

Agentic AI Security Guardrails: A Practical Governance Checklist

Readers don’t only want a definition; they want guidance they can apply. A strong agentic AI deployment should include technical guardrails and governance guardrails. These controls reduce risk from mistakes, hallucinations, bias, and misuse—while keeping the benefits of speed and autonomy.

Minimum guardrails for safe autonomous security agents

Use least-privilege access for every integration. Keep a clear action boundary: allow the agent to investigate widely, but restrict high-impact actions unless policy conditions are met. Add human-in-the-loop approvals for sensitive steps (like account lockouts or large-scale deletes).

Log everything (inputs, actions, outputs) for auditability. Maintain an allowlist for tools and destinations. Finally, run regular “agent red-team” testing to see how your agent behaves under manipulation, confusing inputs, or adversarial prompts.
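Several of these guardrails can be combined in a single policy gate that every agent action passes through. The sketch below is a minimal illustration: the tool names, the `NEEDS_APPROVAL` set, and the log format are hypothetical, and a real deployment would persist the audit log in tamper-resistant storage.

```python
import json
from datetime import datetime, timezone
from typing import Optional

# Hypothetical policy: which tools exist for the agent, and which need a human.
ALLOWED_TOOLS = {"lookup_domain", "quarantine_email", "lock_account"}
NEEDS_APPROVAL = {"lock_account"}

audit_log: list[str] = []


def request_action(tool: str, target: str, approved_by: Optional[str] = None) -> str:
    """Gate one agent action through the allowlist and approval policy."""
    if tool not in ALLOWED_TOOLS:
        decision = "denied"            # allowlist: unknown tools never run
    elif tool in NEEDS_APPROVAL and approved_by is None:
        decision = "pending_approval"  # human-in-the-loop for sensitive steps
    else:
        decision = "allowed"

    # Log every request, including denied ones, for auditability.
    audit_log.append(json.dumps({
        "time": datetime.now(timezone.utc).isoformat(),
        "tool": tool,
        "target": target,
        "approved_by": approved_by,
        "decision": decision,
    }))
    return decision
```

Routing every action through one function keeps the action boundary in a single place, which also gives red-team tests a clear target: try to get a high-impact tool to run without passing through the gate.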

Future Outlook: What’s Next for Agentic AI?

Gartner predicts that by 2028, one-third of AI interactions will involve autonomous agents completing tasks without human intervention. (Source) As AI advances, cybersecurity must adapt to:

- AI Battlefront: Defensive and malicious AI systems will continuously evolve, competing to outsmart each other.

- Tighter Regulations: Governments will introduce stricter AI laws, such as the EU AI Act and an expanded CCPA, to ensure transparency and security.

- Smarter Cyber Threats: Hackers will create AI-driven attacks designed to bypass security protections.

- Automated Security: Organizations will rely on Agentic AI for 24/7 threat detection and response.

Keepnet has been named a go-to vendor for stopping deepfake and AI disinformation attacks by Gartner in 2025, and we build agentic AI capabilities inside our “Extended Human Risk Management Platform” to reduce real-world human risk. This is not only about detection; it is about helping employees make safer decisions, speeding up incident response, and delivering measurable improvements across phishing, smishing, vishing, quishing, and MFA fatigue scenarios.

Editor's note: This article was updated on December 29, 2025.